Deep Engineering #29: Imran Ahmad on Architecting Real-World AI Systems

What it takes to move AI from demo to durable systems—architecture, agents, and ops.

Teach Someone You Love Python 🐍 | Open Python 101 for Beginners

Have a friend or teammate who keeps saying they want to learn Python?

On Thu, Dec 12, 9:00–10:30am ET, Python Illustrated authors Maaike and Imke van Putten are running a free, virtual, live beginner intro to Python in an online editor—no installs, no setup, just Hello World, simple logic, a small mini-project, and Q&A with a book giveaway.

Spread the word—and a bit of holiday cheer—from Deep Engineering.

✍️From the editor’s desk,

In his keynote at AWS re:Invent 2025, AWS CEO Matt Garman said that AI agents will ultimately have a bigger impact than the internet or cloud computing themselves. At the same event, AWS introduced an Agentic AI Specialization for partners, with CrowdStrike highlighted as an inaugural launch partner securing agentic workloads on AWS. Enterprises are now being asked to run AI—and agentic AI—in production, at scale, on real architectures.

What does it take to architect AI systems that meet real constraints on cost, reliability, security, sustainability, and operations?

To explore that, we’ve collaborated again with Imran Ahmad, data scientist, educator, and author focused on algorithms, AI, and cloud computing. He leads machine learning projects for the Canadian government, teaches at Carleton University, and is an authorized instructor for AWS and Google Cloud. With Packt, he has authored several books, including Architecting AI Software Systems (2025, with co-author Richard D Avila).

Here’s what’s in today’s issue:

Feature: “Architecting AI Systems for the Real World” - Key Insights from my conversation with Imran Ahmad

The complete “Chapter 1: Fundamentals of AI System Architecture” from Architecting AI Software Systems by Richard D Avila and Imran Ahmad.

The full Deep Engineering interview with Imran Ahmad, covering agentic AI, RAG vs. agentic RAG, UX, cross-functional teams, and how the architect’s role is changing.

And in today’s Tech Briefs, we look at MCP’s November 2025 spec and the security research landing alongside it, Fara-7B as Microsoft’s on-device agentic model for direct UI control, vLLM-Omni as a new framework for efficient omni-modal model serving in production, Anthropic’s internal study on how engineers actually use Claude and Claude Code in daily work, and more.

Let us begin.

Architecting AI Systems for the Real World: Key Insights from Imran Ahmad

AI is everywhere, but turning a machine learning prototype into a reliable, cost-effective product is hard work. Simply dropping a model into an existing system rarely works; success requires sound architecture and planning. Imran Ahmad has seen this firsthand as a data science leader and AI educator, and he shares practical advice on designing scalable, sustainable AI systems that work in the real world.

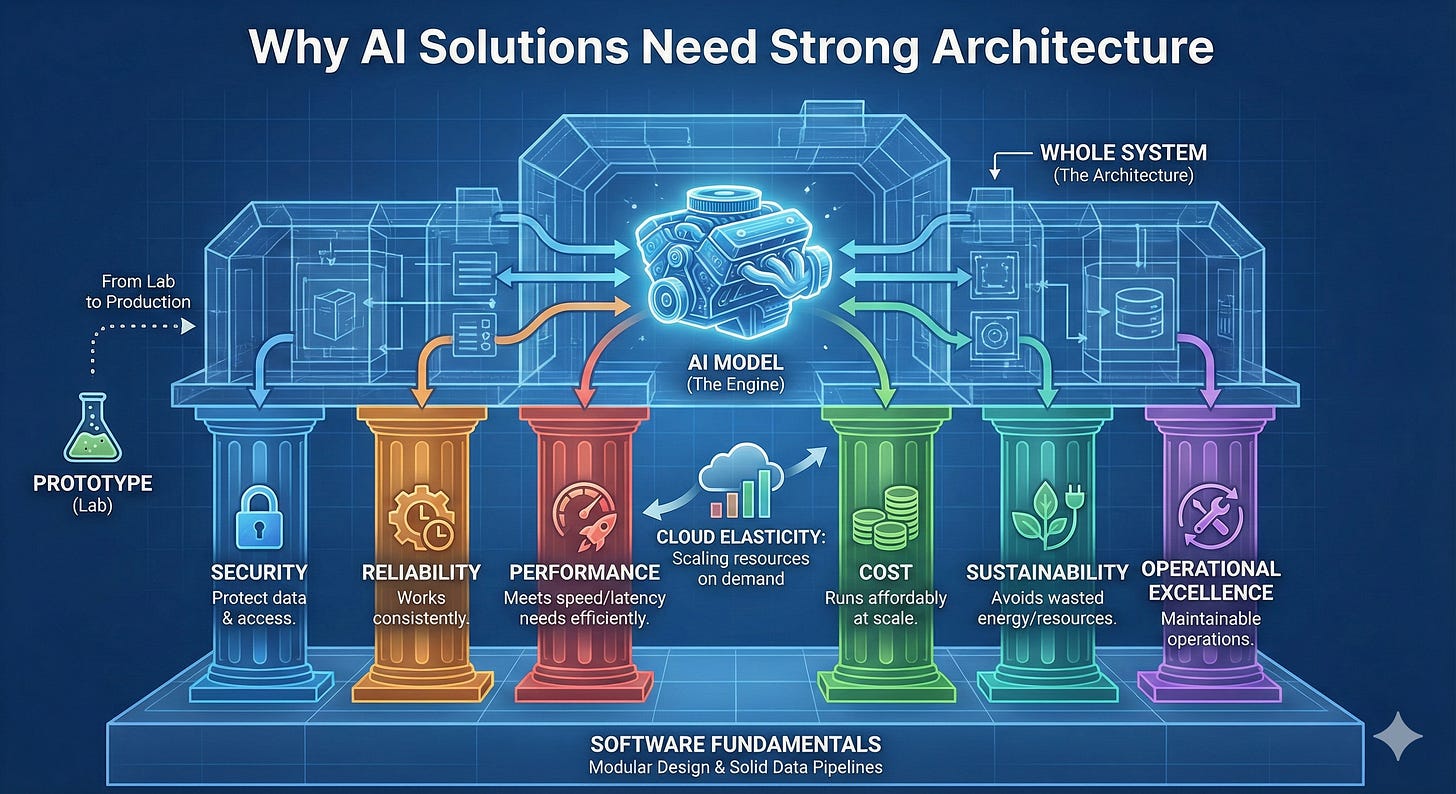

Why AI Solutions Need Strong Architecture

An AI product isn’t just a good model; it’s the whole system around it. To take a prototype from the lab to production, you must design for real-world conditions from the start. Ahmad suggests rating your architecture against six key pillars:

Security: Protect data and access.

Reliability: Works consistently.

Performance: Meets speed/latency needs efficiently.

Cost: Runs affordably at scale.

Sustainability: Avoids wasted energy/resources.

Operational Excellence: Maintainable operations.
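One way to make the six pillars actionable during a design review is a simple scorecard. The sketch below is a hypothetical illustration, not Ahmad’s method: the 1 (weak) to 5 (strong) scale, the threshold of 3, and the example scores are all assumptions. It refuses to rank a design until every pillar has been scored, then lists the weakest pillars first.

```python
from dataclasses import dataclass

# The six pillars listed above; the scoring scale and threshold are assumptions.
PILLARS = (
    "security",
    "reliability",
    "performance",
    "cost",
    "sustainability",
    "operational_excellence",
)


@dataclass
class PillarReview:
    """Design-review scores per pillar, from 1 (weak) to 5 (strong)."""
    scores: dict[str, int]

    def validate(self) -> None:
        missing = set(PILLARS) - set(self.scores)
        if missing:
            raise ValueError(f"unscored pillars: {sorted(missing)}")
        for name, score in self.scores.items():
            if not 1 <= score <= 5:
                raise ValueError(f"{name} score {score} is outside 1-5")

    def weakest(self, threshold: int = 3) -> list[str]:
        """Return pillars scoring below the threshold, weakest first."""
        self.validate()
        low = [p for p in PILLARS if self.scores[p] < threshold]
        return sorted(low, key=lambda p: self.scores[p])


review = PillarReview(
    scores={
        "security": 4,
        "reliability": 3,
        "performance": 4,
        "cost": 2,
        "sustainability": 1,
        "operational_excellence": 3,
    }
)
print(review.weakest())  # ['sustainability', 'cost']
```

Sorting the gaps by score gives the review a natural order of work: fix the weakest pillar before polishing the ones that already pass.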

The stronger your design is in these areas, the better its chances of thriving in production. The pillars also interact: cloud elasticity (scaling resources on demand), for example, lets you achieve high performance without paying for idle servers. Ahmad also stresses nailing software fundamentals (like modular design and solid data pipelines) before adding AI.

From Concept to Design – Iterate and Evaluate

Designing an AI solution means choosing the right components (data pipelines, models, interfaces) for the job. Be prepared to iterate on this design. The first architecture you sketch might not be optimal. As Ahmad puts it, “Your first attempt at the design may not be the best one. You have to rethink; you have to evolve.” A good way to vet a design is to build a pilot (a scaled-down version) and see if it meets your speed, reliability, and cost goals. If not, adjust early before full deployment.
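A pilot is only useful if “meets your speed, reliability, and cost goals” is checked against explicit numbers. The following is a minimal sketch of such a gate, assuming hypothetical targets (p95 latency, error rate, cost per 1,000 requests) and made-up pilot measurements; the p95 uses the nearest-rank percentile method.

```python
import math
from dataclasses import dataclass


@dataclass
class PilotTargets:
    """Hypothetical pass/fail targets for a pilot; set them from real requirements."""
    p95_latency_ms: float
    max_error_rate: float
    max_cost_per_1k_requests: float


def p95(samples: list[float]) -> float:
    """95th percentile by the nearest-rank method."""
    ordered = sorted(samples)
    rank = math.ceil(95 * len(ordered) / 100)  # integer math avoids float rounding surprises
    return ordered[rank - 1]


def pilot_gaps(latencies_ms, errors, requests, total_cost, targets):
    """List every target the pilot missed; an empty list means it passed."""
    gaps = []
    observed_p95 = p95(latencies_ms)
    if observed_p95 > targets.p95_latency_ms:
        gaps.append(f"p95 latency {observed_p95:.0f} ms exceeds {targets.p95_latency_ms:.0f} ms")
    error_rate = errors / requests
    if error_rate > targets.max_error_rate:
        gaps.append(f"error rate {error_rate:.2%} exceeds {targets.max_error_rate:.2%}")
    cost_per_1k = total_cost * 1000 / requests
    if cost_per_1k > targets.max_cost_per_1k_requests:
        gaps.append(
            f"cost ${cost_per_1k:.2f} per 1k requests exceeds ${targets.max_cost_per_1k_requests:.2f}"
        )
    return gaps


targets = PilotTargets(p95_latency_ms=200, max_error_rate=0.01, max_cost_per_1k_requests=2.00)
latencies = [120.0] * 19 + [900.0]  # one slow outlier in 20 samples
print(pilot_gaps(latencies, errors=3, requests=1000, total_cost=2.50, targets=targets))
# ['cost $2.50 per 1k requests exceeds $2.00']
```

Here the pilot is fast and reliable enough but too expensive, which is exactly the kind of finding worth having before full deployment rather than after.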

Another step is checking the project’s viability up front. Ahmad suggests asking: Cost – can we afford it at scale? Speed – will it make things faster? Accuracy – are the results better than the current method? You don’t need improvements in all three, but be clear about which benefit your AI delivers and ensure it outweighs any drawbacks.
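The cost/speed/accuracy question can be written down as a comparison against the current method. This is a hypothetical sketch, not a prescribed formula: it assumes you can measure cost per task, time per task, and accuracy for both the baseline and the AI candidate, and it treats the design as viable if at least one axis improves while none regresses by more than an assumed 5%.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    cost_per_task: float     # lower is better
    seconds_per_task: float  # lower is better
    accuracy: float          # higher is better, as a fraction from 0 to 1


def relative_gains(baseline: Metrics, candidate: Metrics) -> dict[str, float]:
    """Relative change on each axis, signed so that positive always means 'better'."""
    return {
        "cost": (baseline.cost_per_task - candidate.cost_per_task) / baseline.cost_per_task,
        "speed": (baseline.seconds_per_task - candidate.seconds_per_task) / baseline.seconds_per_task,
        "accuracy": (candidate.accuracy - baseline.accuracy) / baseline.accuracy,
    }


def is_viable(baseline: Metrics, candidate: Metrics, max_regression: float = 0.05) -> bool:
    """At least one axis must improve, and no axis may get worse by more than max_regression."""
    gains = relative_gains(baseline, candidate).values()
    return any(g > 0 for g in gains) and all(g >= -max_regression for g in gains)


manual = Metrics(cost_per_task=4.00, seconds_per_task=600, accuracy=0.90)
ai_assisted = Metrics(cost_per_task=4.10, seconds_per_task=30, accuracy=0.91)
print(is_viable(manual, ai_assisted))                        # True: much faster, slightly pricier
print(is_viable(manual, Metrics(6.00, 30, 0.91)))            # False: cost regresses by 50%
```

The regression tolerance is where the “ensure it outweighs any drawbacks” judgment lives; a team might allow a larger cost increase for a large accuracy gain, for instance.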

Architecting for Scalability and Flexibility

AI systems often need heavy compute power, but avoid running expensive resources 24/7 if you don’t have to. One smart strategy is to decouple data and compute: keep data in durable storage, and spin up compute instances only when needed (for training jobs or batch processing), then shut them down. This yields high performance on demand while keeping costs and energy use in check.
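The decouple-data-and-compute pattern boils down to “start compute for a job, and always release it afterwards.” The sketch below assumes a hypothetical `HypotheticalProvisioner` standing in for a real cloud SDK (it only records start/stop calls), and an example storage URI; the point is the context manager, which stops the instance even when the job fails.

```python
from contextlib import contextmanager


class HypotheticalProvisioner:
    """Stand-in for a cloud SDK: records start/stop calls instead of creating real instances."""

    def __init__(self) -> None:
        self.running: set[str] = set()
        self.events: list[tuple[str, str]] = []

    def start(self, instance_type: str) -> str:
        instance_id = f"{instance_type}-{len(self.events)}"
        self.running.add(instance_id)
        self.events.append(("start", instance_id))
        return instance_id

    def stop(self, instance_id: str) -> None:
        self.running.discard(instance_id)
        self.events.append(("stop", instance_id))


@contextmanager
def ephemeral_compute(provisioner: HypotheticalProvisioner, instance_type: str):
    """Start compute for one job and always stop it afterwards, even if the job fails."""
    instance_id = provisioner.start(instance_type)
    try:
        yield instance_id
    finally:
        provisioner.stop(instance_id)


def run_training_job(provisioner: HypotheticalProvisioner, dataset_uri: str) -> str:
    # Data lives in durable storage (dataset_uri); the instance holds nothing that must survive.
    with ephemeral_compute(provisioner, "gpu-large") as instance:
        return f"trained on {dataset_uri} using {instance}"


provisioner = HypotheticalProvisioner()
print(run_training_job(provisioner, "s3://example-bucket/training-data/"))
print(provisioner.running)  # set(): nothing left running after the job
```

Because results are written back to durable storage, losing or deliberately stopping the instance costs nothing but the time to start another one.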

Also, design differently for model training vs. inference. Training jobs can run in batch mode (e.g. overnight on cloud GPUs) and then be turned off. Serving live predictions might require always-on servers for low latency, but not every feature needs instant results. If a task can be done hourly or daily, use batch processing instead of real-time APIs and save resources.
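A rough way to apply the “does this need to be real-time?” question is to map a freshness requirement to a serving mode and compare the monthly compute bill. The sketch assumes a hypothetical GPU instance at $3.00/hour, a 1.5-hour daily batch job, and 730 hours per month; it ignores storage, networking, and batch job runtime headroom.

```python
HOURS_PER_MONTH = 730  # average hours in a month (8,760 / 12)


def choose_mode(max_staleness_minutes: float) -> str:
    """Pick the cheapest serving mode that still meets a freshness requirement.

    Assumes batch jobs finish well within their interval; real schedules need headroom.
    """
    if max_staleness_minutes < 60:
        return "real-time"
    if max_staleness_minutes < 24 * 60:
        return "hourly batch"
    return "daily batch"


def monthly_cost_always_on(hourly_rate: float, replicas: int = 1) -> float:
    """An always-on endpoint pays for every hour, busy or idle."""
    return hourly_rate * replicas * HOURS_PER_MONTH


def monthly_cost_daily_batch(hourly_rate: float, job_hours: float, runs_per_month: int = 30) -> float:
    """A batch job pays only while it runs."""
    return hourly_rate * job_hours * runs_per_month


print(choose_mode(5))                       # real-time
print(choose_mode(24 * 60))                 # daily batch
print(monthly_cost_always_on(3.00))         # 2190.0
print(monthly_cost_daily_batch(3.00, 1.5))  # 135.0
```

Under these assumed numbers, a task that can tolerate day-old results costs roughly one sixteenth as much as an always-on endpoint.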

Choosing cloud vs. on-premises deployment is another factor. It depends on data sensitivity, regulations, and legacy systems. If you move to the cloud, redesign the system to use cloud features like auto-scaling; simply lifting an on-prem design into the cloud without changes can end up costing more. Aim for an architecture that scales and adapts efficiently as needs grow.
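Auto-scaling is what lets a cloud design stop paying for idle capacity. As an illustration, the sketch below follows the ratio-based rule documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current replicas × current metric / target metric), with a 10% tolerance band (the HPA’s documented default) to avoid flapping. The replica bounds and metric values are assumptions chosen for the example.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Target-tracking scale decision in the style of the Kubernetes HPA formula."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: do nothing, avoid flapping
    # Multiply before dividing so whole-number inputs stay exact before ceil().
    desired = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, current_metric=90, target_metric=60))                  # 6: scale out
print(desired_replicas(6, current_metric=20, target_metric=60, min_replicas=2))  # 2: scale in
print(desired_replicas(5, current_metric=63, target_metric=60))                  # 5: within tolerance
```

A lifted-and-shifted design sized for peak load would keep all replicas running at 20% utilization; the scaling rule is what turns that idle capacity into savings.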

Avoid Pitfalls – Lock-In and Rigor

Beware of tying your solution to one provider’s tools. Vendor lock-in is risky if that provider fails or becomes too expensive. Use abstractions and standard frameworks so you can swap components easily. For example, use a library that supports multiple ML backends instead of coding directly to one vendor’s API, and containerize your application to keep it portable across clouds.
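The abstraction idea can be as small as one interface that application code depends on. This sketch uses `typing.Protocol`; `TextModel`, the two adapter classes, and the registry are hypothetical names, and the adapters return placeholder strings where a real one would call a vendor SDK or a self-hosted model.

```python
from typing import Protocol


class TextModel(Protocol):
    """The only model interface application code is allowed to depend on."""

    def generate(self, prompt: str) -> str: ...


class VendorAModel:
    """Hypothetical adapter; a real one would wrap vendor A's SDK here."""

    def generate(self, prompt: str) -> str:
        return f"[vendor-a] {prompt}"


class LocalModel:
    """Hypothetical adapter for a self-hosted model."""

    def generate(self, prompt: str) -> str:
        return f"[local] {prompt}"


BACKENDS = {"vendor-a": VendorAModel, "local": LocalModel}


def make_model(name: str) -> TextModel:
    """Choose the backend by configuration, not by code changes."""
    return BACKENDS[name]()


def summarize(ticket: str, model: TextModel) -> str:
    # Business logic sees only TextModel, so swapping providers touches no code here.
    return model.generate(f"Summarize: {ticket}")


print(summarize("Printer offline", make_model("local")))  # [local] Summarize: Printer offline
```

Switching providers then means writing one new adapter and changing a configuration value, rather than rewriting every call site.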

Another pitfall is weak engineering practices. Many AI projects lack tests, monitoring, or documentation. Treat an AI system like any critical software: write tests for data pipelines and model outputs, monitor performance, and plan for maintainability and security. Have cross-functional stakeholders agree on what a successful outcome looks like (covering both model metrics and system reliability/UX). This ensures the final system is robust from all angles.
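Testing an AI system covers both the data pipeline and the model’s outputs. Below is a small pytest-style sketch; `clean_records` and `predict_fraud_probability` are hypothetical stand-ins (the “model” is a toy logistic score on the amount), and the properties checked (incomplete rows dropped, probabilities in range, higher amounts scoring higher) are examples of invariants, not a complete test suite.

```python
import math


def clean_records(records):
    """Example pipeline step: drop rows missing 'amount' and normalize currency codes."""
    cleaned = []
    for row in records:
        if row.get("amount") is None:
            continue
        cleaned.append({**row, "currency": row["currency"].strip().upper()})
    return cleaned


def predict_fraud_probability(row):
    """Hypothetical stand-in model: a logistic score centred on an amount of 500."""
    return 1 / (1 + math.exp(-(row["amount"] - 500) / 100))


def test_pipeline_drops_incomplete_rows():
    rows = [{"amount": 10, "currency": " usd"}, {"amount": None, "currency": "EUR"}]
    assert clean_records(rows) == [{"amount": 10, "currency": "USD"}]


def test_model_outputs_are_valid_probabilities():
    for amount in (0, 500, 10_000):
        p = predict_fraud_probability({"amount": amount, "currency": "USD"})
        assert 0.0 <= p <= 1.0


def test_model_scores_larger_amounts_higher():
    low = predict_fraud_probability({"amount": 100, "currency": "USD"})
    high = predict_fraud_probability({"amount": 900, "currency": "USD"})
    assert low < high


if __name__ == "__main__":
    # pytest discovers test_* functions automatically; this lets the file run standalone too.
    test_pipeline_drops_incomplete_rows()
    test_model_outputs_are_valid_probabilities()
    test_model_scores_larger_amounts_higher()
    print("all tests passed")
```

Property checks like these keep working when the model is retrained, unlike tests that pin exact predictions.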

User Experience Matters

No matter how powerful the AI, users will reject it if it’s hard to use or understand. Always design the AI solution as a simple service for the end user, hiding the complexity. For example, Google Maps runs complex algorithms for routing and traffic, but to the user it’s just “enter destination, get directions.” Likewise, make sure your AI’s outputs are presented in a clear, helpful way. Good UX builds trust and encourages adoption of the system.

Looking Ahead

The AI landscape continues to evolve. One major trend is multi-agent systems, in which multiple AI agents collaborate autonomously. This approach brings new capabilities and new challenges, while better methods for integrating enterprise knowledge into AI are also emerging. For architects, such changes make continuous learning essential. The architect’s role is no longer a one-time design exercise but ongoing involvement, guiding the system through its iterations. And while AI technology moves fast, core principles remain the anchor of success: keep systems secure, reliable, efficient, cost-effective, maintainable, and user-friendly.

🧠Expert Insight

The complete “Chapter 1: Fundamentals of AI System Architecture” from the book, Architecting AI Software Systems by Richard D Avila and Imran Ahmad

Fundamentals of AI System Architecture

The recent surge of public interest in Artificial Intelligence (AI), particularly with the rise of generative AI, has ignited a wave of excitement and demand for comprehensive AI solutions. This heightened interest extends beyond tech enthusiasts and researchers to businesses, governments, and individuals seeking to harness AI’s power to solve real-worl…

The complete Deep Engineering interview with Imran Ahmad

Architecting AI Software Systems for the Real World: A Conversation with Imran Ahmad

AI systems are now everywhere in software, but turning a promising model into a reliable, cost-effective, and sustainable product is still hard work. Teams are discovering that “just add a model” is not enough; you need end-to-end architecture that can take an idea from a lab-style proof of concept to a production system that meets real constraints arou…

🛠️Tool of the Week

KServe: Kubernetes-Native Model Serving for Scalable, Vendor-Agnostic AI Inference

KServe is an open-source, Kubernetes-native platform for deploying and operating predictive and generative AI models in production using a standard, cloud-agnostic inference API.

Highlights

Open-source and actively maintained: Apache-2.0 licensed CNCF project with ongoing development and an active community.

Proven in production: Adopted by organizations running real-world inference workloads across multiple model types and frameworks.

Built for scalable inference: Provides an InferenceService abstraction, autoscaling (including scale-to-zero), GPU support, and support for common ML/LLM frameworks.

Cloud-agnostic architecture: Runs on any Kubernetes cluster (on-prem or cloud), helping teams avoid hard coupling to a single vendor’s serving stack.
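To give a feel for the standard inference API, here is a minimal client sketch for KServe’s V1 inference protocol, which exposes a predict endpoint at `/v1/models/<model_name>:predict`, accepts a JSON body of the form `{"instances": [...]}`, and returns `{"predictions": [...]}`. The base URL, model name, and feature values are hypothetical; in a real cluster the address depends on how the InferenceService is exposed (ingress, DNS, Host headers). The code only builds the request, so it runs without a cluster.

```python
import json
import urllib.request


def build_v1_predict_request(base_url: str, model_name: str, instances: list) -> urllib.request.Request:
    """Build (but do not send) a KServe V1-protocol predict request."""
    url = f"{base_url.rstrip('/')}/v1/models/{model_name}:predict"
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def parse_v1_predictions(raw: bytes) -> list:
    """Extract the predictions list from a V1-protocol response body."""
    return json.loads(raw)["predictions"]


# Hypothetical endpoint and model name, for illustration only.
req = build_v1_predict_request("http://sklearn-iris.example.com", "sklearn-iris", [[6.8, 2.8, 4.8, 1.4]])
print(req.full_url)  # http://sklearn-iris.example.com/v1/models/sklearn-iris:predict
# Against a live InferenceService you would send it with:
#     with urllib.request.urlopen(req) as resp:
#         print(parse_v1_predictions(resp.read()))
```

Because the client speaks only the protocol, the same code works whichever framework or cloud hosts the model behind it.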

📎Tech Briefs

MCP’s November 2025 Spec: Long-Running AI Agents Meet Very Real Security Issues: Deep Engineering’s MCP analysis pairs the new 2025-11-25 Model Context Protocol spec—focused on long-running tasks and enterprise security—with fresh research on real-world MCP deployments that have already suffered exposed servers, critical vulnerabilities, and a 35-day cross-tenant data leak.

Fara-7B: On-device agentic model for computer use: Microsoft Research introduces Fara-7B, a 7B-parameter “computer use agent” that drives the UI directly (mouse, keyboard, scrolling) instead of just answering in text. It runs locally on Copilot+ PCs and via open weights (MIT license) on Foundry and Hugging Face, targeting real-world web tasks like form filling, booking, and information lookup.

vLLM-Omni: Omni-modal model serving for text, image, audio, and video: The vLLM team announces vLLM-Omni, an extension of vLLM for high-throughput omni-modality serving. It adds support for text, images, audio, and video, and goes beyond standard autoregressive LLMs to handle diffusion transformers and other parallel generation architectures.

How AI is transforming work at Anthropic: Anthropic publishes an internal study on how its own engineers use Claude and Claude Code: 132 engineers surveyed, 53 interviews, and usage data analysis. Engineers report using Claude in ~60% of their work with self-reported ~50% productivity gains and ~27% of Claude-assisted work representing tasks that simply wouldn’t have happened otherwise.

CrowdStrike Operationalizes and Secures Agentic AI Workloads on AWS: CrowdStrike becomes an inaugural AWS Agentic AI Specialization partner and describes how it is “operationalizing and securing agentic AI workloads on AWS.” The release outlines an “agentic SOC” (security operations center) where human analysts orchestrate fleets of security agents built on the CrowdStrike platform.

That’s all for today. Thank you for reading this issue of Deep Engineering. We’re just getting started, and your feedback will help shape what comes next. Do take a moment to fill out this short survey we run monthly—as a thank-you, we’ll add one Packt credit to your account, redeemable for any book of your choice.

We’ll be back next week with more expert-led content.

Stay awesome,

Divya Anne Selvaraj

Editor-in-Chief, Deep Engineering

If your company is interested in reaching an audience of developers, software engineers, and tech decision makers, you may want to advertise with us.
