Deep Engineering #5: Dhirendra Sinha (Google) and Tejas Chopra (Netflix) on Scaling, AI Ops, and System Design Interviews

Drawing on their experience designing fault-tolerant systems at Big Tech and hiring for system design roles, Chopra and Sinha share lessons on designing for failure, automation, and the importance of trade-off thinking.

Welcome to the fifth issue of Deep Engineering.

With AI workloads reshaping infrastructure demands and distributed systems becoming the default, engineers are facing new failure modes, stricter trade-offs, and rising expectations in both practice and hiring.

To explore what today’s engineers need to know, we spoke with Dhirendra Sinha (Software Engineering Manager at Google, and long-time distributed systems educator) and Tejas Chopra (Senior Engineer at Netflix and Adjunct Professor at UAT). Their recent book, System Design Guide for Software Professionals (Packt, 2024), distills decades of practical experience into a structured approach to design thinking.

In this issue, we unpack their hard-won lessons on observability, fault tolerance, automation, and interview performance—plus what it really means to design for scale in a world where even one-in-a-million edge cases are everyday events.

You can watch the full interview and read the transcript here—or keep reading for our take on the design mindset that will define the next decade of systems engineering.

Designing for Scale, Failure, and the Future — With Dhirendra Sinha and Tejas Chopra

“Foundational system design principles—like scalability, reliability, and efficiency—are remarkably timeless,” notes Chopra, adding that “the rise of AI only reinforces the importance of these principles.” In other words, new AI systems can’t compensate for poor architecture; they reveal its weaknesses. Sinha concurs: “If the foundation isn’t strong, the system will be brittle—no matter how much AI you throw at it.” AI and system design aren’t at odds – “they complement each other,” says Chopra, with AI introducing new opportunities and stress-tests for our designs.

One area where AI is elevating system design is in AI-driven operations (AIOps). Companies are increasingly using intelligent automation for tasks like predictive autoscaling, anomaly detection, and self-healing.
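As a rough illustration of the anomaly detection mentioned above, the sketch below flags metric samples that deviate sharply from a rolling baseline. It is a simple statistical stand-in (a rolling z-score), not the machine-learning models that commercial AIOps tools use; the function name, window size, threshold, and latency figures are all assumptions made for the example.

```python
from collections import deque
from statistics import mean, stdev


def detect_anomalies(samples, window=5, threshold=3.0):
    """Return indices of samples that deviate sharply from the preceding window.

    A sample is flagged when it lies more than `threshold` standard deviations
    from the mean of the previous `window` samples.
    """
    history = deque(maxlen=window)
    anomalies = []
    for index, value in enumerate(samples):
        if len(history) == window:
            baseline = mean(history)
            spread = stdev(history)
            # A perfectly flat window has zero spread; skip it to avoid dividing by zero.
            if spread > 0 and abs(value - baseline) / spread > threshold:
                anomalies.append(index)
        history.append(value)
    return anomalies


# Hypothetical p99 latency readings in milliseconds; the spike is at index 7.
latencies_ms = [102, 98, 101, 99, 100, 103, 97, 450, 101, 99]
anomalous_indices = detect_anomalies(latencies_ms)
print(anomalous_indices)
```

Note that a flagged spike still enters the rolling window and inflates the baseline for the next few samples. A production detector would likely exclude or down-weight it.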

“There’s a growing demand for observability systems that can predict service outages, capacity issues, and performance degradation before they occur,” notes Sam Suthar, founding director of Middleware. AI-powered monitoring can catch patterns and bottlenecks ahead of failures, allowing teams to fix issues before users notice. At the same time, designing the systems to support AI workloads is a fresh challenge. The recent rollout of a Ghibli-style image generator saw explosive demand – so much that OpenAI’s CEO had to ask users to pause as GPU servers were overwhelmed. That architecture didn’t fully account for the parallelization and scale such AI models required. AI can optimize and automate a lot, but it will expose any gap in your system design fundamentals. As Sinha puts it, “AI is powerful, but it makes mastering the fundamentals of system design even more critical.”

Scaling Challenges and Resilience in Practice

So, what does it take to operate at web scale in 2025? Sinha highlights four key challenges facing large-scale systems today:

Scalability under unpredictable load: global services must handle sudden traffic spikes without falling over or grossly over-provisioning. Even the best capacity models can be off, and “unexpected traffic can still overwhelm systems,” Sinha says.

Balancing the classic trade-offs between consistency, performance, and availability: This remains as relevant as ever. In practice, engineers constantly juggle these – and must decide where strong consistency is a must versus where eventual consistency will do.

Security and privacy at scale have grown harder: Designing secure systems for millions of users, with evolving privacy regulations and threat landscapes, is an ongoing battle.

The rise of AI introduces “new uncertainties”: we’re still learning how to integrate AI agents and AI-driven features safely into large architectures.

Chopra offers an example from Netflix: “We once had a live-streaming event where we expected a certain number of users – but ended up with more than three times that number.” The system struggled not because it was fundamentally mis-designed, but due to hidden dependency assumptions. In a microservices world, “you don’t own all the parts—you depend on external systems. And if one of those breaks under load, the whole thing can fall apart,” Chopra warns. A minor supporting service that wasn’t scaled for 3× traffic can become the linchpin that brings down your application. This is why observability is paramount. At Netflix’s scale (hundreds of microservices handling asynchronous calls), tracing a user request through the maze is non-trivial. Teams invest heavily in telemetry to know “which service called what, when, and with what parameters” when things go wrong. Even so, “stitching together a timeline can still be very difficult” in a massive distributed system, especially with asynchronous workflows. Modern observability tools (distributed tracing, centralized logging, etc.) are essential, and even these are evolving with AI assistance to pinpoint issues faster.
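To make the timeline-stitching problem concrete, here is a minimal sketch of rebuilding one request's call tree from spans that arrive out of order and interleaved with other requests. It assumes every span carries a shared trace ID and its parent's span ID, which is the general idea behind distributed tracing. The `Span` fields and service names are hypothetical and do not reflect Netflix's telemetry schema.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Span:
    trace_id: str             # shared by every call made on behalf of one request
    span_id: str
    parent_id: Optional[str]  # None marks the entry point of the request
    service: str
    start_ms: int


def stitch_timeline(spans, trace_id):
    """Rebuild the call tree for one request as (depth, service, start_ms) rows."""
    children = defaultdict(list)
    for span in spans:
        if span.trace_id == trace_id:
            children[span.parent_id].append(span)

    timeline = []

    def visit(span, depth):
        timeline.append((depth, span.service, span.start_ms))
        # Visit callees in the order they were started.
        for child in sorted(children[span.span_id], key=lambda s: s.start_ms):
            visit(child, depth + 1)

    for root in sorted(children[None], key=lambda s: s.start_ms):
        visit(root, 0)
    return timeline


# Spans as a collector might receive them: unordered, with two traces mixed together.
collected = [
    Span("t1", "c", "b", "billing", 130),
    Span("t1", "a", None, "api-gateway", 100),
    Span("t2", "x", None, "api-gateway", 105),
    Span("t1", "b", "a", "playback", 110),
    Span("t1", "d", "a", "recommendations", 120),
]
timeline = stitch_timeline(collected, "t1")
for depth, service, start_ms in timeline:
    print(f"{'  ' * depth}{service} (t={start_ms}ms)")
```

The sketch silently drops spans whose parent never arrived. In asynchronous workflows, those orphaned spans are one reason a real timeline is hard to reconstruct.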

So how do Big Tech companies approach scalability and robustness by design? One mantra is to design for failure. Assume everything will eventually fail and plan accordingly. “We operate with the mindset that everything will fail,” says Chopra. That philosophy birthed tools like Netflix’s Chaos Monkey, which randomly kills live instances to ensure the overall system can survive outages. If a service or an entire region goes down, your architecture should gracefully degrade or auto-heal without waking up an engineer at 2 AM. Sinha recalls an incident from his days at Yahoo:

“I remember someone saying, “This case is so rare, it’s not a big deal,” and the chief architect replied, “One in a million happens every hour here.” That’s what scale does—it invalidates your assumptions.”
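To put rough numbers on that remark: at a steady rate of about 280 requests per second, a one-in-a-million case is already expected about once an hour. The short calculation below shows how quickly that grows; the traffic levels are hypothetical, not figures from the interview.

```python
def rare_events_per_hour(requests_per_second, odds=1_000_000):
    """Expected number of 1-in-`odds` events in one hour at a steady request rate."""
    return requests_per_second * 3600 / odds


# Hypothetical traffic levels chosen only to show the trend.
for rps in (300, 10_000, 1_000_000):
    expected = rare_events_per_hour(rps)
    print(f"{rps:>9,} req/s -> {expected:,.1f} one-in-a-million events per hour")
```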

In high-scale systems, even million-to-one chances occur regularly, so no corner case is truly negligible. In Big Tech, achieving resilience at scale has resulted in three best practices:

Fault-tolerant, horizontally scalable architectures: At Netflix and other companies, such architectures ensure that if one node or service dies, the load redistributes and the system heals itself quickly. Teams focus not just on launching features but “landing” them safely – meaning they consider how each new deployment behaves under real-world loads, failure modes, and even disaster scenarios. Automation is key: from continuous deployments to automated rollback and failover scripts. “We also focus on automating everything we can—not just deployments, but also alerts. And those alerts need to be actionable,” Sinha says.

Explicit capacity planning and graceful degradation: Engineers define clear limits for how much load a system can handle and build in back-pressure or shedding mechanisms beyond that. Systems often fail when someone makes unrealistic assumptions about unlimited capacity. Caching, rate limiting, and circuit breakers become your safety net. Gradual rollouts further boost robustness. “When we deploy something new, we don’t release it to the entire user base in one go,” Chopra explains. Whether it’s a new recommendation algorithm or a core infrastructure change, Netflix will enable it for a small percentage of users or in one region first, observe the impact, then incrementally expand if all looks good. This staged rollout limits the blast radius of unforeseen issues. Feature flags, canary releases, and region-by-region deployments should be standard operating procedure.

Infrastructure as Code (IaC): Modern infrastructure tooling also contributes to resiliency. Many organizations now treat infrastructure as code, defining their deployments and configurations in declarative scripts. As Sinha notes, “we rely heavily on infrastructure as code—using tools like Terraform and Kubernetes—where you define the desired state, and the system self-heals or evolves toward that.” By encoding the target state of the system, companies enable automated recovery; if something drifts or breaks, the platform will attempt to revert to the last good state without manual intervention. This codified approach also makes scaling and replication more predictable, since environments can be spun up from the same templates.
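The desired-state idea Sinha describes can be sketched as a small reconcile step: compare what is declared with what is observed, then plan the actions that close the gap. This is a toy model under stated assumptions, not how Terraform or Kubernetes are implemented; the function names, service names, and replica counts are invented for illustration.

```python
def plan_changes(desired, actual):
    """Compare desired and observed replica counts and return the actions needed.

    Both arguments map a service name to a replica count.
    """
    actions = []
    for service, want in sorted(desired.items()):
        have = actual.get(service, 0)
        if have < want:
            actions.append(("start", service, want - have))
        elif have > want:
            actions.append(("stop", service, have - want))
    # Anything still running that is no longer declared is stopped entirely.
    for service, have in sorted(actual.items()):
        if service not in desired and have > 0:
            actions.append(("stop", service, have))
    return actions


def apply_actions(actual, actions):
    """Apply planned actions to a copy of the observed state (simulated here)."""
    state = dict(actual)
    for verb, service, count in actions:
        delta = count if verb == "start" else -count
        state[service] = state.get(service, 0) + delta
    return {name: count for name, count in state.items() if count > 0}


desired_state = {"api": 3, "worker": 2}
observed_state = {"api": 1, "worker": 2, "legacy-cron": 1}  # drifted from the declaration

actions = plan_changes(desired_state, observed_state)
converged_state = apply_actions(observed_state, actions)
print(actions)
print(converged_state)
```

Running the same plan again on the converged state yields no actions. That idempotence is what lets a reconcile loop run continuously without causing churn.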

These same principles—resilience, clarity, and structured thinking—also underpin how engineers should approach system design interviews.

Mastering the System Design Interview

Cracking the system design interview is a priority for many mid-level engineers aiming for senior roles, and for good reason. Sinha points out that system design skill isn’t just a hiring gate – it often determines your level/title once you’re in a company. Unlike coding interviews where problems have a neat optimal solution, “system design is messy. You can take it in many directions, and that’s what makes it interesting,” Sinha says. Interviewers want to see how you navigate an open-ended problem, not whether you can memorize a textbook solution. Both Sinha and Chopra emphasize structured thinking and communication. Hiring managers deliberately ask ambiguous or underspecified questions to see if the candidate will impose structure: Do they ask clarifying questions? Do they break the problem into parts (data storage, workload patterns, failure scenarios, etc.)? Do they discuss trade-offs out loud? Sinha and Chopra offer two guidelines:

There’s rarely a single “correct” answer: What matters is reasoning and demonstrating that you can make sensible trade-offs under real-world constraints. “It’s easy to choose between good and bad solutions,” Sinha notes, “but senior engineers often have to choose between two good options. I want to hear their reasoning: Why did you choose this approach? What trade-offs did you consider?” A strong candidate will articulate why, say, they picked SQL over NoSQL for a given scenario – and acknowledge the downsides or conditions that might change that decision. In fact, Chopra may often follow up with “What if you had 10× more users? Would your choice change?” to test the adaptability of a candidate’s design. He also likes to probe on topics like consistency models: strong vs eventual consistency and the implications of the CAP theorem. Many engineers “don’t fully grasp how consistency, availability, and partition tolerance interact in real-world systems,” Chopra observes, so he presents scenarios to gauge depth of understanding.

Demonstrate a collaborative, inquisitive approach: A system design interview shouldn’t be a monologue; it’s a dialogue. Chopra says, “I try to keep the interview conversational. I want the candidate to challenge some of my assumptions.” For example, a candidate might ask: What are the core requirements? Are we optimizing for latency or throughput? or How many users are we targeting initially? — “that kind of questioning is exactly what happens in real projects,” Chopra explains. It shows the candidate isn’t just regurgitating a pre-learned architecture, but is actively scoping the problem like they would on the job. Strong candidates also prioritize requirements on the fly – distinguishing must-haves (e.g. high availability, security) from nice-to-haves (like an optional feature that can be deferred).

Through years of interviews, Sinha and Chopra have noticed three common pitfalls:

Jumping into solution-mode too fast: “Candidates don’t spend enough time right-sizing the problem,” says Chopra. “The first 5–10 minutes should be spent asking clarifying questions—what exactly are we designing, what are the constraints, what assumptions can we make?” Diving straight into drawing boxes and lines can lead you down the wrong path. Sinha agrees: “They hear something familiar, get excited, and dive into design mode—often without even confirming what they’re supposed to be designing. In both interviews and real life, that’s dangerous. You could end up solving the wrong problem.”

Lack of structure – jumping randomly between components without a clear plan: This scattered approach makes it hard to know if you’ve covered the key areas. Interviewers prefer a candidate who outlines a high-level approach (e.g. client > service > data layer) before zooming in, and who checks back on requirements periodically.

Poor time management: It’s common for candidates to get bogged down in details early (like debating the perfect database indexing scheme) and then run out of time to address other important parts of the system. Sinha and Chopra recommend practicing your pacing and being willing to defer some details. It’s better to have a complete, if imperfect, design than a perfect cache layer with no time to discuss security or analytics requirements. If an interviewer hints to move on or asks about an area you haven’t covered, take the cue. “Listen to the interviewer’s cues,” Sinha advises. “We want to help you succeed, but if you miss the hints, we can’t evaluate you properly.”

Tech interviews in general have gotten more demanding in 2025. The format of system design interviews hasn’t drastically changed, but the bar is higher. Companies are more selective, sometimes even “downleveling” strong candidates if they don’t perfectly meet the senior criteria. Evan King and Stefan Mai, cofounders of an interview preparation startup, observe in an article in The Pragmatic Engineer that “performance that would have secured an offer in 2021 might not even clear the screening stage today.” This reflects a market where competition is fierce and expectations for system design prowess are rising. But as Chopra and Sinha illustrate, the goal is not to memorize solutions – it’s to master the art of trade-offs and critical thinking.

Beyond Interviews: System Design as a Career Catalyst

System design isn’t just an interview checkbox – it’s a fundamental skill for career growth in engineering. “A lot of people revisit system design only when they're preparing for interviews,” Sinha says. “But having a strong grasp of system design concepts pays off in many areas of your career.” It becomes evident when you’re vying for a promotion, writing an architecture document, or debating a new feature in a design review.

Engineers with solid design fundamentals tend to ask the sharp questions that others miss (e.g. What happens if this service goes down? or Can our database handle 10x writes?). They can evaluate new technologies or frameworks in the context of system impact, not just code syntax. Technical leadership roles especially demand this big-picture thinking. In fact, many companies now expect even engineering managers to stay hands-on with architecture – “system design skills are becoming non-negotiable” for leadership.

Mastering system design also improves your technical communication. As you grow more senior, your success depends on how well you can simplify complexity for others – whether in documentation or in meetings. “It’s not just about coding—it’s about presenting your ideas clearly and convincingly. That’s a huge part of leadership in engineering,” Sinha notes. Chopra agrees, framing system design knowledge as almost a mindset: “System design is almost a way of life for senior engineers. It’s how you continue to provide value to your team and organization.” He compares it to learning math: you might not explicitly use the quadratic formula daily, but learning it trains your brain in problem-solving.

Perhaps the most exciting aspect is that the future is wide open. “Many of the systems we’ll be working on in the next 10–20 years haven’t even been built yet,” Chopra points out. We’re at an inflection point with technologies like AI agents and real-time data streaming pushing boundaries; those with a solid foundation in distributed systems will be the “go-to” people to harness these advances. And as Chopra notes,

“seniority isn’t about writing complex code. It’s about simplifying complex systems and communicating them clearly. That’s what separates great engineers from the rest.”

System design proficiency is a big part of developing that ability to cut through complexity.

Emerging Trends and Next Frontiers in System Design

While core principles remain steady, the ecosystem around system design is evolving rapidly. We can identify three significant trends:

Integration of AI Agents with Software Systems: As Gavin Bintz writes in Agent One, an emerging trend is the integration of AI agents with everyday software systems. New standards like Anthropic’s Model Context Protocol (MCP) are making it easier for AI models to securely interface with external tools and services. You can think of MCP as a sort of “universal adapter” that lets a large language model safely query your database, call an API like Stripe, or post a message to Slack – all through a standardized interface. This development opens doors to more powerful, context-aware AI assistants, but it also raises architectural challenges. Designing a system that grants an AI agent limited, controlled access to critical services requires careful thought around authorization, sandboxing, and observability (e.g., tracking what the AI is doing). Chopra sees MCP as fertile ground for new system design patterns and best practices in the coming years.

Deepening of observability and automation in system management: Imagine systems that not only detect an anomaly but also pinpoint the likely root cause across your microservices and possibly initiate a fix. As Sam Suthar, Founding Director at Middleware, observes, early steps in this direction are already in play – for example, tools that correlate logs, metrics, and traces across a distributed stack and use machine learning to identify the culprit when something goes wrong. The ultimate goal is to dramatically cut Mean Time to Recovery (MTTR) when incidents occur, using AI to assist engineers in troubleshooting. As one case study showed, a company using AI-based observability was able to resolve infrastructure issues 75% faster while cutting monitoring costs by 75%. The complexity of modern cloud environments is pushing us toward this new normal of predictive, adaptive systems.

Sustainable software architecture: There is growing dialogue now about designing systems that are not only robust and scalable, but also efficient in their use of energy and resources. The surge in generative AI has shone a spotlight on the massive power consumption of large-scale services. According to Kemene et al., in an article published by the World Economic Forum (WEF), data centers powering AI workloads can consume as much electricity as a small city; the International Energy Agency projects data center energy use will more than double by 2030, with AI being “the most important driver” of that growth. Green software engineering principles urge us to consider the carbon footprint of our design choices. Sinha suggests this as an area to pay attention to.

Despite faster cycles, sharper constraints, and more automation, system design remains grounded in principles. As Chopra and Sinha make clear, the ability to reason about failure, scale, and trade-offs isn’t just how systems stay up; it’s also how engineers move up in their careers.

If you found Sinha and Chopra’s perspective on designing for scale and failure compelling, their book System Design Guide for Software Professionals unpacks the core attributes that shape resilient distributed systems. The following excerpt from Chapter 2 breaks down how consistency, availability, partition tolerance, and other critical properties interact in real-world architectures. You’ll see how design choices around reads, writes, and replication influence system behavior—and why understanding these trade-offs is essential for building scalable, fault-tolerant infrastructure.

Expert Insight: Distributed System Attributes by Dhirendra Sinha and Tejas Chopra

The complete “Chapter 2: Distributed System Attributes” from the book System Design Guide for Software Professionals by Dhirendra Sinha and Tejas Chopra (Packt, August 2024)

…

Before we jump into the different attributes of a distributed system, let’s set some context in terms of how reads and writes happen.

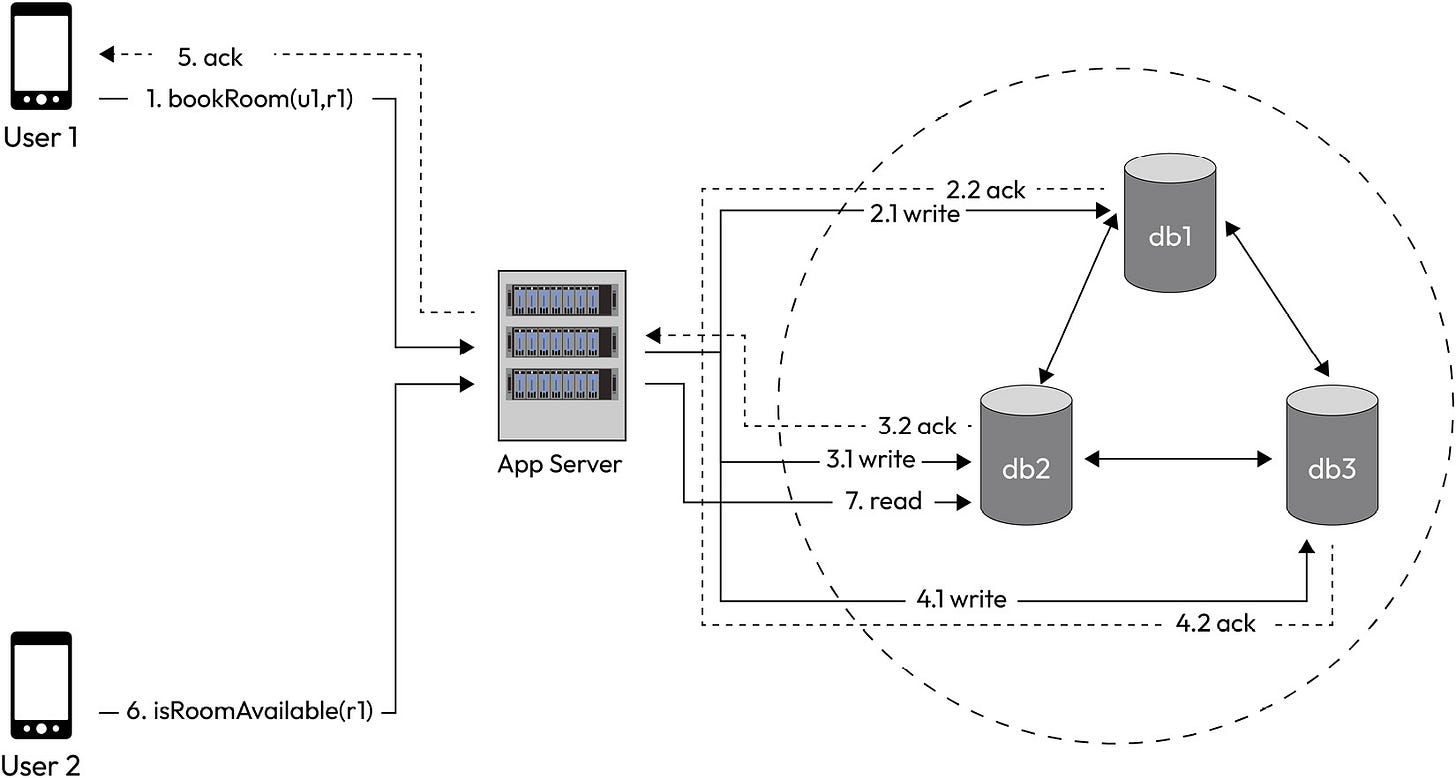

Let’s consider an example of a hotel room booking application (Figure 2.1). A high-level design diagram helps us understand how writes and reads happen:

Figure 2.1 – Hotel room booking request flow

As shown in Figure 2.1, a user (u1) is booking a room (r1) in a hotel and another user is trying to see the availability of the same room (r1) in that hotel. Let’s say we have three replicas of the reservations database (db1, db2, and db3). There are two ways the writes can get replicated to the other replicas: the app server itself writes to all replicas, or the database has replication support and the writes get replicated without explicit writes by the app server.
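As an editorial illustration (not taken from the book), the two replication options can be sketched with in-memory stand-ins for db1, db2, and db3. The class and function names are hypothetical. The second option is modeled here as asynchronous replication from db1, which is an assumption made to show why a read in between can be stale.

```python
class Replica:
    """In-memory stand-in for one copy of the reservations database."""

    def __init__(self, name):
        self.name = name
        self.bookings = {}  # room id -> user id

    def is_available(self, room):
        return room not in self.bookings


def write_to_all(replicas, room, user):
    """Option 1: the app server writes the booking to every replica itself."""
    for replica in replicas:
        replica.bookings[room] = user


def write_to_one(replica, room, user):
    """Option 2, first half: the app server writes once, to a single replica."""
    replica.bookings[room] = user


def replicate(source, followers):
    """Option 2, second half: the database copies the source's data to the others."""
    for follower in followers:
        follower.bookings.update(source.bookings)


db1, db2, db3 = Replica("db1"), Replica("db2"), Replica("db3")

# u1 books r1 through db1; replication to db2 and db3 has not run yet.
write_to_one(db1, "r1", "u1")
stale_read = db2.is_available("r1")   # db2 still reports r1 as free

replicate(db1, [db2, db3])
fresh_read = db2.is_available("r1")   # db2 now sees the booking

print(stale_read, fresh_read)
```

With the first option (`write_to_all`), no replica lags behind in this toy model. The cost is that the app server must handle the case where some of its writes fail.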

Let’s look at the write and the read flows:

System Design Guide for Software Professionals by Dhirendra Sinha and Tejas Chopra (Packt, August 2024) is a comprehensive, interview-ready manual for designing scalable systems in real-world settings. Drawing on their experience at Google, Netflix, and Yahoo, the authors combine foundational theory with production-tested practices—from distributed systems principles to high-stakes system design interviews.

Through case studies of real systems like Instagram, Uber, and Google Docs, this book covers core components such as DNS, databases, caching layers, queues, and APIs. It also walks you through critical theorems, trade-offs, and design attributes—helping you think clearly about scale, resilience, and performance under modern constraints.

For a limited time, get the eBook for $9.99 at packtpub.com — no code required.

🛠️ Tool of the Week

Diagrams 0.24.4 — Architecture Diagrams as Code, for System Designers

Diagrams is an open source Python toolkit that lets developers define cloud architecture diagrams using code. Designed for rapid prototyping and documentation, it supports major cloud providers (AWS, GCP, Azure), Kubernetes, on-prem infrastructure, SaaS services, and common programming frameworks—making it ideal for reasoning about modern system design.

The latest release (v0.24.4, March 2025) adds stability improvements and ensures compatibility with recent Python versions. Diagrams has been adopted in production projects like Apache Airflow and Cloudiscovery, where infrastructure visuals need to be accurate, automatable, and version controlled.

Highlights:

Diagram-as-Code: Define architecture models using simple Python scripts—ideal for automation, reproducibility, and tracking in Git.

Broad Provider Support: Over a dozen categories including cloud platforms, databases, messaging systems, DevOps tools, and generic components.

Built on Graphviz: Integrates with Graphviz to render high-quality, publishable diagrams.

Extensible and Scriptable: Easily integrate with build pipelines or architecture reviews without relying on external design tools.
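For a sense of what diagram-as-code looks like in practice, here is a minimal sketch modeled on the project's documented usage. It assumes the `diagrams` package and Graphviz are installed; the function name, node labels, and output filename are our own choices for illustration.

```python
def render_three_tier(filename="three_tier_service"):
    """Render a load balancer -> web servers -> database diagram to <filename>.png."""
    # Imported inside the function so this module still loads on machines
    # where the diagrams package (and Graphviz) is not installed.
    from diagrams import Diagram
    from diagrams.aws.compute import EC2
    from diagrams.aws.database import RDS
    from diagrams.aws.network import ELB

    # show=False writes the image file without opening a viewer.
    with Diagram("Three-Tier Service", filename=filename, show=False):
        ELB("load balancer") >> [EC2("web-1"), EC2("web-2")] >> RDS("reservations db")
    return f"{filename}.png"
```

Calling `render_three_tier()` should write `three_tier_service.png` to the working directory. Because the diagram is ordinary Python, it can be reviewed in pull requests and regenerated in a build pipeline like any other source file.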

📰 Tech Briefs

Analyzing Metastable Failures in Distributed Systems: A new HotOS'25 paper builds on prior work to introduce a simulation-based pipeline—spanning Markov models, discrete event simulation, and emulation—to help engineers proactively identify and mitigate metastable failure modes in distributed systems before they escalate.

A Senior Engineer's Guide to the System Design Interview: A comprehensive, senior-level guide to system design interviews that demystifies core concepts, breaks down real-world examples, and equips engineers with a flexible, conversational framework for tackling open-ended design problems with confidence.

Using Traffic Mirroring to Debug and Test Microservices in Production-Like Environments: Explores how production traffic mirroring—using tools like Istio, AWS VPC Traffic Mirroring, and eBPF—can help engineers safely debug, test, and profile microservices under real-world conditions without impacting users.

Designing Instagram: This comprehensive system design breakdown of Instagram outlines the architecture, APIs, storage, and scalability strategies required to support core features like media uploads, feed generation, social interactions, and search—emphasizing reliability, availability, and performance at massive scale.

Chiplets and the Future of System Design: A forward-looking piece on how chiplets are reshaping the assumptions behind system architecture—covering yield, performance, reuse, and the growing need for interconnect standards and packaging-aware system design.

That’s all for today. Thank you for reading the fifth issue of Deep Engineering. We’re just getting started, and your feedback will help shape what comes next.

Take a moment to fill out this short survey we run monthly—as a thank-you, we’ll add one Packt credit to your account, redeemable for any book of your choice.

We’ll be back next week with more expert-led content.

Stay awesome,

Divya Anne Selvaraj

Editor-in-Chief, Deep Engineering