Deep Engineering #12: Tony Dunsworth on AI for Public Safety and Critical Systems

From quantization to synthetic data, how to build AI that’s fast, private, and resilient under pressure.

Welcome to the twelfth issue of Deep Engineering

“The challenge isn’t how to train the biggest model—it’s how to make a small one reliable.”

That’s how Tony Dunsworth sums up his work building AI infrastructure for 911 emergency systems. In public safety, failure can have devastating effects with lives at stake. You’re also working with limited compute, strict privacy mandates, and call centers staffed by only two to five people at a time. There’s no budget for a proprietary AI stack. And there’s no tolerance for downtime.

Dunsworth holds a Ph.D. in data science, with a dissertation focused on forecasting models for public safety answering points. For over 15 years, he’s worked across the full data lifecycle—from backend engineering to analytics and deployment—in some of the most sensitive domains in government. Today, he leads AI and data efforts for the City of Alexandria, where he’s building secure, on-prem AI systems that help triage calls, reduce response time, and improve operational resilience.

To understand what it takes to design AI systems that are cost-effective, maintainable, and safe to use in high-stakes environments, we spoke with Dunsworth about his use of synthetic data, model quantization, open-weight LLMs, and risk validation under operational load.

You can watch the complete interview and read the transcript here or scroll down for our synthesis of what it takes to build mission-ready AI with small teams, tight constraints, and hardly any margin for error.

Building Emergency-Ready AI: Scaling Down to Meet Constraints – with Tony Dunsworth

How engineers in critical systems can design reliable, resource-efficient AI to meet hard limits on privacy, compute, and risk.

AI adoption in the public sector is accelerating, but slowly. A June 2025 EY survey of government executives found that 64% see AI’s cost-saving potential and 63% expect improved services, yet only 26% have integrated AI across their organizations. The appetite is there, but so are steep barriers: 62% cited data privacy and security concerns as a major hurdle (the top issue), alongside the lack of a clear data strategy, inadequate infrastructure and skills, unclear ROI, and funding shortfalls. Public agencies face tight budgets, limited tech staff, legacy hardware, and strict privacy mandates, all under an expectation of near-100% uptime for critical services.

Public safety systems epitomize these constraints. Emergency dispatch centers can’t ship voice transcripts or medical data off to a cloud API that might violate privacy or go down mid-call. They also can’t afford fleets of cutting-edge GPUs; many 9-1-1 centers run on commodity servers or even ruggedized edge devices. AI solutions here must fit into existing, resource-constrained environments. For engineers building AI systems in production, scale isn't always the hard part—constraints are.

By treating public safety as a high-constraint exemplar, we can derive patterns applicable to other domains like healthcare (with HIPAA privacy and limited hospital IT), fintech (with heavy regulation and risk controls), logistics (where AI might run on distributed edge devices), embedded systems (tiny hardware, real-time needs), and regulated enterprises (compliance and uptime demands). In all such cases, “bigger” AI is not necessarily better – adaptability, efficiency, and trustworthiness determine adoption.

Leaner Models for Mission-Critical Systems

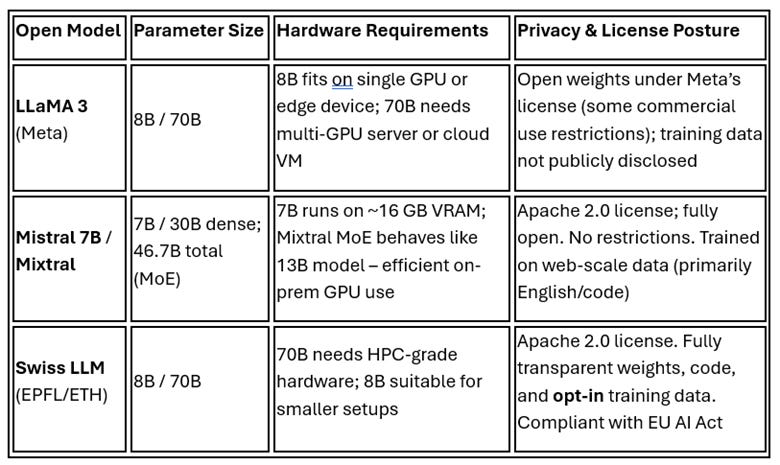

Open models come with transparent weights and licenses that allow self-hosting and fine-tuning, which is crucial when data cannot leave your premises. By 2025, several open large language models (LLMs) are available that combine strong capabilities with manageable size:

Meta LLaMA 3: Released in April 2024 with 8B and 70B parameter versions, LLaMA 3 offered state-of-the-art performance on many tasks at release and improved reasoning, and Meta touts it as “the best open source models of their class”. However, its custom license restricts certain commercial uses, and the training data is not fully disclosed. In practice, the 70B model is powerful but demanding to run, while the 8B version is far more lightweight.

Mistral 7B / Mixtral: The French startup Mistral AI has focused on efficiency. Mistral 7B (a 7-billion-parameter model) punches above its weight, often outperforming larger 13B models, especially on English and code tasks. Mistral AI also introduced Mixtral 8×7B, a sparse Mixture-of-Experts model with 46.7B total parameters, of which only ~13B are active per token. According to Mistral AI, “Mixtral outperforms Llama 2 70B on most benchmarks with 6× faster inference,” and it ships under the permissive Apache 2.0 license. It matches or beats GPT-3.5 on most standard benchmarks at a fraction of the runtime cost. Because only part of the network runs for each token, Mixtral needs far less compute per token than a dense model of similar quality, although all 46.7B parameters must still fit in memory.

Swiss “open-weight” LLM: This is a new 70B-parameter model developed by a coalition of academic institutions (EPFL/ETH Zurich) on the public Alps supercomputer. The Swiss LLM is fully open: weights, code, and training dataset are released for transparency. Its focus is on multilingual support (trained on data in 1,500+ languages) and sovereignty – no dependency on Big Tech or hidden data. Licensed under Apache 2.0, it represents the “full trifecta: openness, multilingualism, and sovereign infrastructure,” designed explicitly for high-trust public sector applications. Importantly, the Swiss model was developed to comply with EU AI Act and Swiss privacy laws from the ground up.

Other open models like Falcon 180B (UAE’s giant model) or BLOOM 176B (the BigScience community model) exist, but their sheer size makes them less practical in constrained settings. The models above strike a better balance. Table 1 compares these representative options by size, hardware needs, and privacy posture:

Table 1: Open-source LLMs suited for constrained deployments, compared by size, infrastructure needs, and privacy considerations.

Choosing an open model allows agencies to avoid vendor lock-in and meet governance requirements. By fine-tuning these models in-house on domain-specific data, teams can achieve high accuracy without sending any data to third-party services. However, open models do come with trade-offs.

The biggest of these, Dunsworth says, “is understanding that the speed is going to be a lot slower. Even with my lab having 24 gigs of RAM, or my office lab having 32 gigs of RAM, they are still noticeably slower than if I'm using an off-site LLM to do similar tasks. So, you have to model your trade-off, because I have to also look at what kind of data I'm using—so that I'm not putting protected health information or criminal justice information out into an area where it doesn't belong and where it could be used for other purposes. So, the on-premises local models are more appealing for me because I can do more with them—I don't have the same concern about the data going out of the networks.”

That’s where techniques like quantization and changes to the model architecture come in, effectively scaling the model down to meet your hardware where it is:

Quantization: Dunsworth defines quantization as follows:

“Quantizing is a way to optimize an LLM by making it work more efficiently with fewer resources—less memory consumption. It doesn’t leverage all of the parameters at once, so it’s able to package things a little bit better. It packages your requests and the tokens a little more efficiently so that the model can work a little faster and return your responses—or return your data—a little quicker to you, so that you can be more interactive with it.”

By reducing the numeric precision of model weights (e.g., from 16-bit to 4-bit), quantization can shrink the memory footprint dramatically and speed up inference, usually at a modest cost in accuracy. For example, a 70B model quantized to 4-bit needs roughly 35 GB for its weights, about the same as a ~17B model stored at 16-bit precision, and it often retains most of its original benchmark performance. Combined with efficient runtimes (such as llama.cpp and its underlying GGML library for CPUs, or GPU kernels optimized for int4/int8 arithmetic), quantization lets a single-GPU workstation host models that would otherwise need several data-center GPUs.
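The memory arithmetic and the core rounding idea can be sketched in a few lines of Python. This is a minimal illustration that assumes simple symmetric per-tensor quantization with one scale factor; runtimes such as llama.cpp use more elaborate block-wise schemes, and the helper names here are hypothetical.

```python
def weight_memory_gb(n_params: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights alone (ignores KV cache and activations)."""
    return n_params * bits_per_weight / 8 / 1e9


def quantize_symmetric(weights: list[float], bits: int) -> tuple[list[int], float]:
    """Map floats to signed integers in [-(2^(bits-1) - 1), 2^(bits-1) - 1] with one scale."""
    qmax = 2 ** (bits - 1) - 1                      # 7 for 4-bit, 127 for 8-bit
    max_abs = max(abs(w) for w in weights) or 1.0   # avoid division by zero
    scale = max_abs / qmax
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate floats; the rounding error is the accuracy cost."""
    return [v * scale for v in q]


if __name__ == "__main__":
    # A 70B-parameter model: 16-bit weights vs 4-bit weights.
    print(weight_memory_gb(70e9, 16))  # 140.0
    print(weight_memory_gb(70e9, 4))   # 35.0

    w = [0.12, -0.5, 0.33, 0.01, -0.27]
    q, s = quantize_symmetric(w, bits=4)
    approx = dequantize(q, s)
    print(q, max(abs(a - b) for a, b in zip(w, approx)))
```

Since 35 GB is roughly what a 17.5B-parameter model occupies at 16 bits (2 bytes per weight), this is where the "~17B in memory terms" comparison comes from. With no clipping, each dequantized weight is off by at most half a scale step.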

Altering the model architecture for efficiency: The Mixture-of-Experts (MoE) design in Mixtral increases the total parameter count (for capacity) but routes each token through only a small subset of experts (two of eight in Mixtral), so you don’t pay the full compute cost on every token. This architecture is a natural fit when you need bursts of capability without constant heavy throughput, much like emergency systems that must handle occasional complex queries quickly but don’t see GPT-scale volumes continuously. The result: big-model quality at small-model compute per token, provided there is enough memory to hold all of the experts.
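The routing idea can be sketched as top-k gating: a small router scores every expert for each token, only the k best-scoring experts run, and their outputs are mixed using softmax weights over the selected scores. This toy version assumes 8 experts and k=2, matching Mixtral's published configuration, but the "experts" here are plain Python functions rather than neural network layers, and the router scores are made up.

```python
import math
from typing import Callable, Sequence


def top_k_route(router_logits: Sequence[float], k: int) -> list[tuple[int, float]]:
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    # Softmax over the selected logits only, so the k weights sum to 1.
    m = max(router_logits[i] for i in chosen)
    exps = [math.exp(router_logits[i] - m) for i in chosen]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(chosen, exps)]


def moe_forward(x: float, router_logits: Sequence[float],
                experts: Sequence[Callable[[float], float]], k: int = 2) -> tuple[float, int]:
    """Evaluate only k experts and mix their outputs; returns (output, experts_evaluated)."""
    routes = top_k_route(router_logits, k)
    out = sum(w * experts[i](x) for i, w in routes)
    return out, len(routes)


# Eight toy "experts": each one just scales its input by a different factor.
experts = [lambda x, s=s: s * x for s in range(1, 9)]
# Hypothetical router scores for a single token.
logits = [0.1, 2.0, -1.0, 0.5, 1.5, -0.3, 0.0, 0.2]

y, used = moe_forward(3.0, logits, experts, k=2)
print(f"output={y:.3f}, experts evaluated={used} of {len(experts)}")
```

Note the design trade-off the sketch makes visible: all eight experts must exist (be loaded in memory), but only two are evaluated per token. That is why MoE saves compute rather than memory.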

Dunsworth’s field lab architecture offers a practical view into how these techniques are actually used. “I’ve been doing more of the work with lightweight or smaller LLM models because they’re easier to get ramped up with,” he says—emphasizing that local deployments reduce risk of data exposure while enabling fast iteration. But even with decent hardware (24–32 GB RAM), resource contention remains a bottleneck:

“The biggest challenge is resource base… I’m pushing the model hard for something, and at the same time I’m pushing the resources… very hard—it gets frustrating.”

That frustration led him to explore quantization hands-on, particularly for inference responsiveness. “I’ve got to make my work more responsive to my users—or it’s not worth it.” Quantization, local hosting, and iterative fine-tuning become less about efficiency for its own sake, and more about achieving practical performance under constraints—especially when “inexpensive” also has to mean maintainable.

In practice, deploying a lean model in a mission-critical setting also demands robust inference software. Projects like vLLM have emerged to maximize throughput on a given GPU by intelligently batching and streaming requests. Its authors report up to 24× higher throughput than Hugging Face Transformers, achieved mainly through PagedAttention (which stores the attention key-value cache in fixed-size blocks to avoid wasted memory) and continuous batching, which schedules token generation across many requests in parallel.
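A toy simulation shows why continuous batching helps. It compares static batching, where a batch waits until its longest request finishes, with continuous batching, where a finished request's slot is refilled on the next decoding step. This is a simplified model that assumes every decoding step takes the same time regardless of batch size. It is not vLLM's actual scheduler, and it ignores PagedAttention's memory management entirely. The workload is hypothetical.

```python
from collections import deque


def static_batching_steps(output_lengths: list[int], batch_size: int) -> int:
    """Each batch runs until its longest request finishes before the next batch starts."""
    steps = 0
    for start in range(0, len(output_lengths), batch_size):
        steps += max(output_lengths[start:start + batch_size])
    return steps


def continuous_batching_steps(output_lengths: list[int], batch_size: int) -> int:
    """Finished requests leave immediately and queued requests take their slot."""
    queue = deque(output_lengths)
    running: list[int] = []   # tokens still to generate for each in-flight request
    steps = 0
    while queue or running:
        # Fill any free slots from the waiting queue.
        while queue and len(running) < batch_size:
            running.append(queue.popleft())
        # One decoding step produces one token for every running request.
        running = [r - 1 for r in running]
        running = [r for r in running if r > 0]
        steps += 1
    return steps


# Hypothetical workload: a mix of short and long responses.
lengths = [5, 100, 8, 12, 90, 6, 7, 110]
print(static_batching_steps(lengths, batch_size=4))      # 210
print(continuous_batching_steps(lengths, batch_size=4))  # 124
```

Under these assumptions, short requests no longer wait behind long ones in the same batch. The real speedup depends heavily on the mix of output lengths and on memory limits.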

Synthetic Data Pipelines: Fidelity with Privacy

Data is the fuel for AI models, but in public safety and healthcare, real data is often sensitive or scarce. This is where synthetic data pipelines have become game-changers, allowing teams to generate realistic, statistically faithful data that mimics real-world patterns without exposing real personal information. By using generative models or simulations to create synthetic call logs, incident reports, sensor readings, etc., engineers can vastly expand their training and testing datasets while staying privacy-compliant.

Dunsworth takes exactly this approach. Rather than rely on real 911 call logs, he reconstructs patterns from operational data to generate synthetic equivalents. “I take it apart and find the things I need to see in it… so when I make that dataset, it reflects those ratios properly,” he explains. This includes recreating distributions across service types (e.g., police, fire, and medical) and reproducing key statistical features like call arrival intervals, elapsed event times, and geospatial distribution.

“For me, it’s a lot of statistical recreation… I can feed that into an AI model and say, ‘OK, I need you to examine this.’”
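Here is a minimal sketch of this kind of statistical recreation, using only Python's standard library. The service-type ratios and the mean inter-arrival time below are hypothetical placeholders, not figures from any real center. In practice they would come from analyzing operational data, as Dunsworth describes. Gaps between calls are drawn from an exponential distribution, which is what a Poisson arrival process implies.

```python
import random
from dataclasses import dataclass


@dataclass
class SyntheticCall:
    call_id: int
    arrival_s: float      # seconds since the start of the simulated shift
    service: str          # police, medical, or fire


# Hypothetical ratios; replace with proportions measured from real data.
SERVICE_MIX = {"police": 0.6, "medical": 0.3, "fire": 0.1}
MEAN_INTERARRIVAL_S = 90.0   # hypothetical: one call every 90 s on average


def generate_calls(n: int, seed: int = 42) -> list[SyntheticCall]:
    rng = random.Random(seed)  # seeded so test datasets are reproducible
    services = list(SERVICE_MIX)
    weights = list(SERVICE_MIX.values())
    t = 0.0
    calls = []
    for i in range(n):
        # Poisson arrivals correspond to exponentially distributed gaps between calls.
        t += rng.expovariate(1.0 / MEAN_INTERARRIVAL_S)
        service = rng.choices(services, weights=weights, k=1)[0]
        calls.append(SyntheticCall(i, t, service))
    return calls


calls = generate_calls(10_000)
for s in SERVICE_MIX:
    share = sum(c.service == s for c in calls) / len(calls)
    print(f"{s}: {share:.3f}")
```

No real record is copied here. Only aggregate parameters (ratios and a rate) carry over from the source data, which is the point of synthesizing structure rather than substance.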

Dunsworth’s pipeline is entirely Python-based and open source. He uses local LLMs to iteratively refine the generated datasets: “I build a lot of it, and then I pass it off to my local models to refine what I’m working on.” That includes teaching the model to correct misleading assumptions, such as when synthetic time intervals defaulted to normal distributions even though the real data followed Poisson-process arrivals or gamma-distributed durations. He writes scripts to analyze the real distributions and feed the correct parameters back into generation:

“Then it tells me, ‘Here’s the distribution, here are its details.’ And I feed that back into the model and say, ‘OK, make sure you’re using this distribution with these parameters.’”
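The feedback step can be sketched as follows: estimate distribution parameters from observed intervals, then pass those parameters back to the generator. This version assumes a gamma distribution and uses the method of moments (shape = mean²/variance, scale = variance/mean), which needs only the standard library. A fuller pipeline might use maximum-likelihood fitting (for example with SciPy) plus a goodness-of-fit test. The "observed" data here is itself simulated for illustration.

```python
import random
import statistics


def fit_gamma_moments(samples: list[float]) -> tuple[float, float]:
    """Method-of-moments estimates (shape k, scale theta) for a gamma distribution."""
    mean = statistics.fmean(samples)
    var = statistics.variance(samples)
    return mean ** 2 / var, var / mean


# Stand-in for real elapsed-time data: a skewed gamma with shape 2 and scale 150 s.
rng = random.Random(7)
observed = [rng.gammavariate(2.0, 150.0) for _ in range(20_000)]

shape, scale = fit_gamma_moments(observed)
print(f"shape={shape:.2f}, scale={scale:.1f}")

# Feed the fitted parameters back into generation instead of a normal approximation.
synthetic = [rng.gammavariate(shape, scale) for _ in range(20_000)]

# A normal with the same mean and stdev puts mass below zero, which is
# impossible for elapsed times; gamma draws are never negative.
mu, sigma = statistics.fmean(observed), statistics.stdev(observed)
normal_draws = [rng.gauss(mu, sigma) for _ in range(20_000)]
print("negative normal draws:", sum(d < 0 for d in normal_draws))
print("negative gamma draws:", sum(d < 0 for d in synthetic))
```

The negative-draw count is a quick, concrete signal that a normal assumption is wrong for skewed, non-negative quantities such as call durations.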

From Data Scarcity to Scalable Testing

The shift to synthetic pipelines can solve multiple problems at once: data scarcity, privacy compliance, and edge-case testing. For training, synthetic records make it easy to balance class frequency—whether you’re modeling rare floods or unusual fraud patterns. For validation, they offer controlled stress tests that historical logs simply can’t provide.

“I use it in testing my analytics models… then I can have my model do the same thing. I make sure that they match.”

Unlike real-world events, synthetic scenarios can be manufactured to simulate extreme or simultaneous failures—testing the AI under precise conditions.

Adoption Grows with Education and Precision

Early adoption wasn’t smooth, Dunsworth says. “The biggest hurdle was pushback from peers at first,” he noted. But that changed as datasets improved in realism, and the utility of using synthetic data for demos, teaching, or sandbox testing became obvious.

“Now people are more interested… I keep it under an open-source license. Just give me the improvements back—that’s the last rule.”

A crucial distinction is that synthetic ≠ anonymized. Rather than redact real identities, Dunsworth starts from a clean slate, using only statistical patterns from real data as seed material. He avoids copying event narratives and even manually inspects Faker-generated names to ensure no accidental leakage:

“I don’t reproduce narratives… I go through my own list of people I know to make sure that name doesn’t show up.”
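Part of this name check can be automated by screening each generated name against a deny list and drawing again on a match. The sketch below uses a hypothetical deny list and a tiny hard-coded name pool as a stand-in for a generator such as Faker. It complements the manual review Dunsworth describes rather than replacing it.

```python
import random

# Hypothetical deny list: names of real people who must never appear in test data.
DENY_LIST = {"jane doe", "john smith"}

# Stand-in name pools; a real pipeline might use the Faker library instead.
FIRST = ["Jane", "John", "Alex", "Priya", "Marcus", "Lena"]
LAST = ["Doe", "Smith", "Okafor", "Nguyen", "Rivera", "Kowalski"]


def normalize(name: str) -> str:
    """Case- and whitespace-insensitive comparison key."""
    return " ".join(name.lower().split())


def safe_name(rng: random.Random, deny: set[str], max_tries: int = 100) -> str:
    """Draw names until one is not on the deny list."""
    for _ in range(max_tries):
        candidate = f"{rng.choice(FIRST)} {rng.choice(LAST)}"
        if normalize(candidate) not in deny:
            return candidate
    raise RuntimeError("could not find an allowed name; expand the name pools")


rng = random.Random(0)
names = [safe_name(rng, DENY_LIST) for _ in range(1_000)]
print(names[:5])
```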

He also aligns his work with formal ethical frameworks.

“I was very fortunate throughout my education—through my software engineering courses, my analytics and data science courses at university—that ethics was stressed as one of the most important things we needed to focus on alongside practice. So, I have very solid ethical programming training.”

Dunsworth also reviews frameworks like the NIST AI RMF to maintain development guardrails.

These practices map directly onto any domain where real data is hard to access—medical records, financial logs, customer transcripts, or operational telemetry. The principles are universal:

Reconstruct statistical structure from clean seeds

Validate outputs against known metrics

Stress test systematically, not opportunistically

Never copy real content—synthesize structure, not substance

Build ethical discipline into your generation workflow

For teams building AI tools without access to real production data, this is a practical playbook. You don’t need a GPU farm or proprietary toolchain. You need controlled pipelines, structured validation, and a robust sense of responsibility. As Dunsworth says:

“I feel confident that the people I work with… are all operating from the same place: protecting as much information as we can… making sure we're not exposing anything that we can’t.”

Stress Before Success: Risk Management and Resilience Engineering

Building AI systems for constrained environments isn’t only about latency, memory, or cost. It’s also about failure and how to survive it.

Dunsworth’s work in emergency response illustrates the stakes clearly, but his framework for risk mitigation is widely transferable: define the use case tightly, control where the data flows, and validate under load—not just in ideal cases.

“One of the biggest risk mitigations is starting out from the beginning—knowing what you want to use AI for and how you define how it’s working well.”

Instead of treating vendor-provided models as turnkey solutions, Dunsworth interrogates the entire data path—from ingestion through inference to retention. That includes third-party dependencies:

“What data am I feeding, and how do I work with that vendor to make sure the data is being used the way I intend?” In sensitive environments, he keeps training in-house: “That way… it doesn't leave my organization.”

Success is measured operationally:

“If you're using it (AI) just to say, ‘Well, we're using AI,’ I'm going to be the first one to raise my hand and say, ‘Stop.’” Instead, AI is validated through concrete outcomes: “It’s enabled our QA manager to process more calls… improving our ability to service our community.”

Stress It Twice, Then Ship

For AI systems that might break under pressure, Dunsworth prescribes a straightforward and brutal regimen:

“Get synthetic data together to test it (the model)—and then just, in the middle of your testing lab, hit it all at once. Hit it with everything you've got, all at the same time.”

Only if the system remains responsive under full overload does it move forward. “If it continues to perform well, then you have some confidence… it’s still going to be reliable enough for you to continue to operate.”

Failure is expected, but it must be observable and recoverable.

“Even if it breaks… we know it can still recover and come back to service quickly.”
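A burst test in this spirit can be scripted with asyncio: fire every synthetic request at once, then record latencies and failures. The `call_model` coroutine below is a hypothetical stub that simulates latency and occasional errors. In a real test it would be replaced by a call to the locally hosted model's API. The request count, timeout, and pass threshold are assumptions to tune for each system.

```python
import asyncio
import random
import statistics
import time


async def call_model(prompt: str, rng: random.Random) -> str:
    """Hypothetical stub standing in for a local inference endpoint."""
    await asyncio.sleep(rng.uniform(0.01, 0.05))  # simulated processing time
    if rng.random() < 0.02:                        # simulated 2% failure rate
        raise RuntimeError("model error")
    return f"ok: {prompt}"


async def timed_call(prompt: str, rng: random.Random, timeout_s: float):
    """Return (latency_seconds, exception_or_None) for one request."""
    start = time.perf_counter()
    try:
        await asyncio.wait_for(call_model(prompt, rng), timeout=timeout_s)
        return time.perf_counter() - start, None
    except Exception as exc:   # includes timeouts raised by wait_for
        return time.perf_counter() - start, exc


async def burst_test(prompts: list[str], timeout_s: float = 1.0, seed: int = 1) -> dict:
    rng = random.Random(seed)
    # "Hit it with everything you've got, all at the same time."
    results = await asyncio.gather(*(timed_call(p, rng, timeout_s) for p in prompts))
    latencies = [lat for lat, err in results if err is None]
    failures = sum(err is not None for _, err in results)
    p95 = statistics.quantiles(latencies, n=20)[-1] if len(latencies) >= 2 else None
    return {"requests": len(prompts), "failures": failures, "p95_s": p95}


if __name__ == "__main__":
    report = asyncio.run(burst_test([f"synthetic call {i}" for i in range(500)]))
    print(report)
    # Example gate (an assumption): at most 5% failures before moving forward.
    print("PASS" if report["failures"] / report["requests"] <= 0.05 else "FAIL")
```

Recording failures instead of letting them crash the run keeps the test focused on the question that matters here: does the system stay responsive, and how often does it fail, under full overload?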

One real-world implementation of this mindset is LogiDebrief, a QA automation system deployed in the Metro Nashville Department of Emergency Communications. Developed to audit 9-1-1 calls in real time, LogiDebrief formalizes emergency protocol as logical rules and then uses an LLM to interpret unstructured audio transcripts, match them against those rules, and flag any deviations. As Chen et al. explain: “The framework formalizes call-taking requirements as logical specifications, enabling systematic assessment of 9-1-1 calls against procedural guidelines”.

In practice, it executes a three-stage pipeline:

Context extraction (incident type, responder actions),

Formal rule evaluation using Signal Temporal Logic,

Deviation reporting for any missed steps.

This enables automated QA for both AI and human decisions—a form of embedded auditing that surfaces failure as it happens. In deployment, LogiDebrief reviewed 1,701 calls and saved over 311 hours of manual evaluator time. More importantly, when something procedural is missed—like a mandatory question for a specific incident type—it gets flagged and can be corrected in downstream training, improving both model performance and human compliance.
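To make "rules as logical specifications" concrete, here is a heavily simplified sketch. Each rule states that, for a given incident type, a required step must occur within a deadline after the call starts. In temporal-logic terms, this is a bounded "eventually" property. This is not LogiDebrief's implementation, which uses Signal Temporal Logic and LLM-based context extraction. The incident types, step names, and deadlines are hypothetical, and the transcript is assumed to have already been turned into timestamped events.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    incident_type: str
    required_step: str
    deadline_s: float   # the step must happen within this many seconds of call start


@dataclass(frozen=True)
class Event:
    t_s: float          # seconds since call start
    step: str


# Hypothetical protocol rules, for illustration only.
RULES = [
    Rule("cardiac_arrest", "confirm_address", 30.0),
    Rule("cardiac_arrest", "ask_is_breathing", 60.0),
    Rule("structure_fire", "ask_anyone_inside", 45.0),
]


def check_call(incident_type: str, events: list[Event], rules: list[Rule]) -> list[str]:
    """Return a deviation report: one line per applicable rule that was not satisfied."""
    deviations = []
    for rule in rules:
        if rule.incident_type != incident_type:
            continue  # rule does not apply to this call
        # Bounded "eventually": the required step occurs at or before the deadline.
        satisfied = any(e.step == rule.required_step and e.t_s <= rule.deadline_s
                        for e in events)
        if not satisfied:
            deviations.append(f"missed '{rule.required_step}' within {rule.deadline_s:.0f}s")
    return deviations


events = [Event(12.0, "confirm_address"), Event(75.0, "ask_is_breathing")]
print(check_call("cardiac_arrest", events, RULES))
# ["missed 'ask_is_breathing' within 60s"]
```

Note that the late question is flagged even though it was eventually asked. The deadline is part of the rule, which is what separates a temporal specification from a simple checklist.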

From Monoliths to Micro-Solutions

When one early AI analytics platform failed under edge-case data—“it just said, ‘I got nothing’”—Dunsworth scrapped the codebase entirely. Why? The workflow made sense to him, but not to his users.

“I assumed I could develop an analytics flow that would work for everybody… it worked well for me, but it didn’t work well for my target audience.”

This led to a major design shift. Instead of building one global solution, he pivoted to “micro-solutions that will do different things inside the same framework.” This insight should be familiar to any engineer who’s seen a service fail not because it was wrong, but because no one could use it.

“If they’re not going to use it—it doesn’t work.”

Anticipating the Next Frontiers

Looking forward, Dunsworth is focused on redirecting complexity, not increasing it. One focus area: offloading non-emergency calls using AI assistants. “It really is a community win-win, because now we can get those services out faster.”

Another: multilingual responsiveness. In cities where services span four or more languages, Dunsworth sees multilingual AI as a matter of equity and latency:

“If we can improve the quality and speed of translation… (that can save a life.)”

Takeaways for Engineers Weighing AI Adoption in Critical Systems

Let us now summarize key risk mitigation strategies – from technical safeguards to policy measures – that can enable organizations to confidently adopt AI in sensitive environments:

Model Compression (Quantization & Pruning): We’ve discussed quantization as a way to make models smaller and faster. This not only enables cheaper hardware, but also reduces power consumption (important for mobile or field deployments) and arguably the attack surface, since smaller models are somewhat easier to analyze for vulnerabilities. Pruning (removing redundant weights) is another technique to shrink models. The overall effect is a lean model that is less likely to overload your systems.
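Unstructured magnitude pruning is one common variant, and it can be sketched in a few lines: zero out the fraction of weights with the smallest absolute values. This toy version works on a flat Python list with a single global threshold. Real frameworks typically prune per layer and fine-tune afterward to recover accuracy.

```python
def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero the `sparsity` fraction of weights with the smallest absolute value."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_prune])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


w = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02, 0.4, -0.1]
print(magnitude_prune(w, sparsity=0.5))
# [0.8, 0.0, 0.3, 0.0, -0.6, 0.0, 0.4, 0.0]
```

Zeros only save memory or compute when the model is stored in a sparse format or run on kernels that skip them, so pruning is usually paired with a runtime that can exploit sparsity.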

Encryption and Secure Execution: In high-trust domains, data encryption is mandatory not just at rest but in transit, and increasingly during computation. Self-hosting an LLM doesn’t automatically guarantee security; teams must ensure that all connections are encrypted (HTTPS/TLS) so that input and output data can’t be intercepted. Tools like Caddy (a web server with automatic TLS) are often used as front ends to internal AI APIs to enforce this. Moreover, techniques like homomorphic encryption and secure enclaves (Intel SGX, etc.) are emerging so that even someone who gains access to the machine running the model cannot read the sensitive data it processes. These techniques are computationally expensive, but they are improving.
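On the client side, Python's standard `ssl` module makes it straightforward to require encrypted, verified connections to an internal AI endpoint. This is a minimal sketch that assumes the internal service presents a certificate signed by an in-house CA. The CA bundle path (`internal-ca.pem`) and the endpoint URL are hypothetical. It complements, rather than replaces, a TLS-terminating front end such as Caddy on the server side.

```python
import ssl
import urllib.request
from typing import Optional


def make_client_context(cafile: Optional[str] = None) -> ssl.SSLContext:
    """TLS context that verifies the server certificate and hostname, TLS 1.2 or newer."""
    # create_default_context enables CERT_REQUIRED and hostname checking by default.
    ctx = ssl.create_default_context(cafile=cafile)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def post_prompt(url: str, body: bytes, cafile: Optional[str] = None) -> bytes:
    """Send a request only over HTTPS; refuse plain HTTP outright."""
    if not url.startswith("https://"):
        raise ValueError("refusing to send data over an unencrypted connection")
    req = urllib.request.Request(url, data=body, headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req, context=make_client_context(cafile), timeout=10) as resp:
        return resp.read()


# Usage (hypothetical endpoint and CA bundle):
# post_prompt("https://ai.internal.example/v1/generate", b'{"prompt": "..."}',
#             cafile="internal-ca.pem")
```

Refusing plain `http://` URLs in code, rather than relying on configuration alone, means a mistyped endpoint fails loudly instead of silently sending sensitive data in cleartext.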

Robust Vendor Governance: If using any third-party models or services, public sector teams impose strict governance – similar to vetting a physical supplier. Open-source models don’t come from a vendor per se, but they still warrant a security review (has the model or its code been audited? is there a risk of embedded trojans?). It is also important to focus on what vendors bring: requiring transparency about model training data (to avoid hidden biases or privacy violations), demanding uptime SLAs if it’s a cloud API, and ensuring models meet regulatory standards.

In-House Fine-Tuning & Monitoring: Rather than rely on a vendor’s generic model, high-constraint deployments should favor owning the last-mile training of the model. By fine-tuning open models on local data, organizations not only boost performance for their specific tasks, they also retain full control of the model’s behavior. This makes it easier to mitigate bias or inappropriate behavior – if the model says something it shouldn’t, you can adjust the training data or add safety filters and retrain. Continuous monitoring is part of this loop: logs of the AI’s outputs should be reviewed (often with tools like LogiDebrief or simple dashboards) to catch any drift or errors. Essentially, the AI should be treated as a critical piece of infrastructure that gets constant telemetry and maintenance, not a “set and forget” software. This reduces the risk of unseen failure modes accumulating over time.

Fallback and Redundancy: Finally, a practical strategy – always have a Plan B. In emergency systems, if the AI fails or is uncertain, it should gracefully hand off to a human or a simpler rule-based system. While this isn’t unique to AI (classic high-availability design), it’s worth noting that large AI models can fail in novel ways (e.g. getting stuck in a hallucination loop). Having a watchdog process that can kill and restart an AI service if it behaves oddly is a form of automated risk mitigation too.
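The hand-off pattern can be sketched with a timeout plus a rule-based fallback. If the model errors out, exceeds its latency budget, or reports low confidence, the request is routed to a simpler deterministic path, and anything the rules cannot classify goes to a human. The `classify_with_model` stub, the keyword rules, and the thresholds are all hypothetical. A process-level watchdog that restarts a misbehaving inference service would sit around this logic rather than inside it.

```python
import asyncio
from typing import Tuple

# Hypothetical keyword rules used as the deterministic fallback path.
KEYWORD_RULES = {"fire": "fire", "smoke": "fire", "not breathing": "medical", "gun": "police"}


def rule_based_triage(text: str) -> str:
    lowered = text.lower()
    for keyword, service in KEYWORD_RULES.items():
        if keyword in lowered:
            return service
    return "human_review"   # no rule matched: a person decides


async def classify_with_model(text: str) -> Tuple[str, float]:
    """Hypothetical stub for a local model call returning (label, confidence)."""
    await asyncio.sleep(0.01)
    return ("medical", 0.55)


async def triage(text: str, timeout_s: float = 0.5, min_conf: float = 0.8) -> Tuple[str, str]:
    """Return (label, source), where source records which path produced the answer."""
    try:
        label, conf = await asyncio.wait_for(classify_with_model(text), timeout=timeout_s)
        if conf >= min_conf:
            return label, "model"
        return rule_based_triage(text), "fallback:low_confidence"
    except Exception:   # timeout or model failure
        return rule_based_triage(text), "fallback:error"


print(asyncio.run(triage("There is smoke coming from the kitchen")))
# ('fire', 'fallback:low_confidence')
```

Returning the source alongside the label keeps every fallback observable, so how often the system falls back can be monitored like any other health metric.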

Each of these strategies, from squeezing models to encrypting everything and from vetting vendors to fine-tuning internally, contributes to an overall posture of trust through transparency and control. Together they turn an unpredictable black box into something that engineers and auditors can reason about and rely on. Dunsworth repeatedly returns to the theme of discipline in engineering choices. Public safety and other critical systems can’t afford guesswork or a “move fast and break things” culture. By enforcing these risk mitigations, engineers can build systems that move fast without breaking in ways they cannot quickly recover from.

🛠️ Tool of the Week

BlueSky Statistics – A GUI-Driven Analytics Platform for R Users

BlueSky Statistics is a desktop-based, open-source analytics platform designed to make R more accessible to non-programmers—offering point-and-click simplicity without sacrificing statistical power. It supports data management, traditional and modern machine learning, advanced statistics, and quality engineering workflows, all through a rich graphical interface.

Highlights:

Drag-and-Drop Data Science for R: BlueSky lets users load, browse, edit, and analyze datasets through interactive data grids—no scripting required. Variables can be recoded, reformatted, and visualized in a few clicks.

Modeling, Machine Learning & Deep Learning: BlueSky supports over 50 modeling algorithms, including decision trees, SVMs, KNN, logistic regression, and ANN/CNN/RNN.

Full Statistical Suite + DoE + Survival Analysis: The platform includes descriptive and inferential statistics, survival models (Kaplan-Meier, Cox), and advanced modules for longitudinal analysis and power studies.

Quality, Process, and Six Sigma Tools: Tailored for manufacturing and process improvement, BlueSky integrates tools aligned with the DMAIC cycle: Pareto and fishbone diagrams, SPC control charts, Gage R&R, process capability analysis, and equivalence testing.

Integrated R IDE for Programmers: For technical users, BlueSky offers a built-in R IDE to write, import, execute, and debug R scripts—bridging GUI simplicity with code-based extensibility.

📎Tech Briefs

A New Perspective On AI Safety Through Control Theory Methodologies | Ullrich et al. | IEEE: Proposes a novel approach to AI safety using principles from control theory—specifically “data control”—to provide a top-down, system-theoretic framework for analyzing and assuring the safety of AI systems in real-world, safety-critical environments.

Can We Make Machine Learning Safe for Safety-Critical Systems? | Dr. Thomas G. Dietterich | Distinguished Professor Emeritus Oregon State University: Outlines a comprehensive framework for integrating machine learning into safety-critical systems by combining risk-driven data collection, formal verification, and continuous anomaly and near-miss detection.

AI Safety vs. AI Security: Demystifying the Distinction and Boundaries | Lin et al. | The Ohio State University: Establishes clear conceptual and technical boundaries between AI Safety (unintentional harm prevention) and AI Security (defense against intentional threats), arguing that precise definitions are essential for effective research, governance, and trustworthy system design—especially as misuse increasingly straddles both domains.

Making certifiable AI a reality for critical systems: SAFEXPLAIN core demo | Barcelona Supercomputing Center (BSC): Introduces the SAFEXPLAIN platform, which offers a structured safety lifecycle and modular architecture for AI-based cyber-physical systems, integrating explainable AI, functional safety patterns, and runtime monitoring.

That’s all for today. Thank you for reading this issue of Deep Engineering. We’re just getting started, and your feedback will help shape what comes next. Do take a moment to fill out this short survey we run monthly—as a thank-you, we’ll add one Packt credit to your account, redeemable for any book of your choice.

We’ll be back next week with more expert-led content.

Stay awesome,

Divya Anne Selvaraj

Editor-in-Chief, Deep Engineering

If your company is interested in reaching an audience of developers, software engineers, and tech decision makers, you may want to advertise with us.