Building with Mojo (Part 1): A Language Born for AI and Systems

An introduction to Mojo’s origins, design goals, and its promise to unify high-performance and Pythonic development.

This article kicks off a multi-part series exploring Mojo, a new programming language developed by the AI company Modular. Mojo is designed for AI-scale performance without abandoning Pythonic ergonomics. In Part 1, we’ll cover the following topics:

Modular, MAX, and Mojo

The problem Mojo addresses in the software industry

The superpowers of Mojo

Mojo’s tooling and ecosystem

Where Mojo stands today

Modular, MAX, and Mojo

Modular is a software company founded by Chris Lattner (of LLVM and Swift fame) and Tim Davis. Its mission is to build a next-generation AI developer platform called MAX (short for Modular Accelerated Xecution), aimed at streamlining and simplifying AI development and deployment. MAX functions more like a framework, built upon Mojo and Python. In fact, MAX’s core is written entirely in Mojo. The full platform is called the Modular software.

Modular’s business model involves deploying its software in enterprise environments and offering consulting services based on its deep technical expertise. However, the “Mojo Community Edition”, which includes the Mojo language and the MAX framework, will always be open and free to use from their GitHub repo.

Mojo is a new Pythonic system-level language. It adopts most, if not all, of the conventions and keywords used in Python, making it accessible to Python developers. At the same time, it allows lower-level programming using pointers, buffers, and tensors when performance is critical. Modular has committed to open-sourcing the entire stack: the standard library is already open, and the Mojo compiler is expected to be open-sourced in 2026.

Mojo’s development is spearheaded by Chris Lattner and his team at Modular, and the language was first made public in May 2023. The language is designed to deliver speed and adaptability to emerging hardware platforms, all within the Pythonic syntax familiar to AI and ML developers. It does this in two ways:

Although software performance is notoriously difficult to benchmark, Mojo regularly matches and sometimes surpasses the speed of Rust and C++, as we’ll see in later sections.

Mojo is the first programming language that allows developers to target a wide range of hardware—including NVIDIA and AMD GPUs—without switching to CUDA. Unlike CUDA, which only works with NVIDIA GPUs, Mojo provides a unified programming model across platforms.

The problem Mojo addresses in the software industry

Python is an excellent language for the design and prototyping stage of machine learning and AI projects, offering a comfortable syntax and a wealth of supporting tools and libraries. Unfortunately, it is much less suitable when these projects move to production, where you need high performance to satisfy customers and reduce costs. Until now, such tasks required switching to a different language like C++ or Rust, which offer good low-level performance.

The Mojo language changes this fragmented workflow entirely: with its Pythonic syntax, memory safety, and C++-like performance, Mojo is well suited for both the development and deployment stages of AI projects, or any other project for that matter.

Python is the number one language used in machine learning and AI projects. It is also rated as the most popular language in the TIOBE index and other language popularity rankings.

Python makes prototyping and modelling easy. Its almost pseudocode-like readability, mild learning curve and vast ecosystem account for its immense popularity.

But Python also has its downsides: most importantly, it runs slowly and cannot easily run code in parallel, leaving most of the cores of current CPUs and GPUs idle. Many attempts have been made to remedy these problems, none of them fully satisfactory.

Performance in an AI project is crucial. When reaching the deployment stage, engineers often rewrite part or all of the Python project in a low-level systems language, preferably C++. Adding to that difficulty, to get access to GPUs, parts of the model must be rewritten in CUDA, or in special accelerator languages for more exotic processors. So, two or three languages are needed, and the project's infrastructure becomes increasingly complex.

There is an urgent need for simplification in this domain. Model development and deployment must both be possible in one high-performance language. Not only that, the language should also be able to work directly with GPUs and other processors.

To remedy this situation, the industry needs a language that is both high-level like Python and low-level like C++ or Rust. This seems impossible, but Chris Lattner and his team at Modular are working on exactly such a language, called Mojo. Chris Lattner is best known for creating LLVM (Low Level Virtual Machine) and, more recently, Multi-Level Intermediate Representation (MLIR), alongside Swift, Clang and his work on TensorFlow.

LLVM and MLIR are both compiler toolchains: as input they take program code, and as output they generate executable machine code for a wide range of processor instruction sets. Between input and output, any number of transformation steps can be defined to optimize the final binary code for the target machine. LLVM has revolutionized the programming languages world since 2003, most notably with Julia, Kotlin, Rust and Swift. MLIR is a sub-project of LLVM and aims at making machine code even more adaptable to specific hardware. Both MLIR and LLVM are used in Mojo. Because of this foundation, Mojo can target both CPUs and GPUs from a single language.
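To make the idea of "transformation steps between input and output" more concrete, here is a toy optimization pass written in Python. It is only a sketch: it works on Python's own syntax tree through the standard `ast` module, not on LLVM or MLIR, and the names `ConstantFolder` and `optimize` are made up for this illustration. The pass performs constant folding, one of the classic compiler optimizations, and needs Python 3.9 or later for `ast.unparse`.

```python
import ast
import operator

# Operators this toy pass knows how to evaluate ahead of time.
_FOLDABLE = {ast.Add: operator.add, ast.Sub: operator.sub, ast.Mult: operator.mul}


class ConstantFolder(ast.NodeTransformer):
    """A single transformation pass: replace `2 * 3` with `6` in the tree."""

    def visit_BinOp(self, node):
        self.generic_visit(node)  # fold the operands first (bottom-up)
        op = _FOLDABLE.get(type(node.op))
        both_numeric = (
            isinstance(node.left, ast.Constant)
            and isinstance(node.right, ast.Constant)
            and isinstance(node.left.value, (int, float))
            and isinstance(node.right.value, (int, float))
        )
        if op is not None and both_numeric:
            folded = ast.Constant(value=op(node.left.value, node.right.value))
            return ast.copy_location(folded, node)
        return node


def optimize(source: str) -> str:
    """Parse source code, run the pass, and emit the transformed code."""
    tree = ConstantFolder().visit(ast.parse(source))
    return ast.unparse(ast.fix_missing_locations(tree))


if __name__ == "__main__":
    print(optimize("x = 2 * 3 + y"))           # x = 6 + y
    print(optimize("z = (1 + 2) * (10 - 4)"))  # z = 18
```

A real toolchain chains many such passes and ends by emitting machine code instead of source text, but the parse, transform, emit shape is the same.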

An example of Mojo code

Let’s now look at some Mojo code to get a sense of its syntax. The main() function is the typical entry point in a standalone Mojo program.

    fn main():
        words = List[String]("Mojo", "is", "fire")  #1
        for ref word in words:  #2
            word += "🔥"  #3
        print_list(words)  #4

    fn print_list(words: List[String]):
        for word in words:  #5
            print(word)

Every Mojo program needs a main() function to run, which, like all functions, can either be prefixed with def or fn. A def function is more flexible and Pythonic, fn is stricter and requires type annotations.

Line 1 defines a list of strings, named words. In line 2, we loop through each string word in the list. The ref prefix makes word mutable. Line 3 appends a 🔥 character to each string. Line 4 calls the print_list function, passing it the list. Finally in line 5, we loop through each word again and print each one.

For a Python programmer, this should look very familiar. But even if you don’t know Python, just from looking at the code, you'll probably be able to understand what it does.
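For comparison, here is a rough Python counterpart of the same idea. It is a sketch and not part of the original example; the function names are invented for illustration. It highlights what the ref prefix buys you in Mojo: in Python, strings are immutable and the loop variable is just a name, so `word += "🔥"` inside a plain for loop leaves the list unchanged, and you have to assign through an index instead.

```python
def add_fire_rebinding(words):
    """Looks like the Mojo loop, but only rebinds the local name `word`."""
    for word in words:
        word += "🔥"  # creates a new string; the list element is untouched
    return words


def add_fire_in_place(words):
    """Updates the list elements themselves by assigning through the index."""
    for i in range(len(words)):
        words[i] += "🔥"
    return words


if __name__ == "__main__":
    print(add_fire_rebinding(["Mojo", "is", "fire"]))  # ['Mojo', 'is', 'fire']
    print(add_fire_in_place(["Mojo", "is", "fire"]))   # ['Mojo🔥', 'is🔥', 'fire🔥']
```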

The superpowers of Mojo

Mojo is fast, scalable, safe and enables metaprogramming. These seem like hype-terms, but Mojo is designed from the ground up to ensure these goals are met, providing developers with a great systems language with a familiar, readable syntax. In this section, we'll go through some arguments to substantiate these claims.

High performance

Performance comparisons show that Mojo easily surpasses Python, often by a factor of 10 or more, and sometimes even by 4 to 5 orders of magnitude. In some benchmarks, it even beats the raw speed of C++.

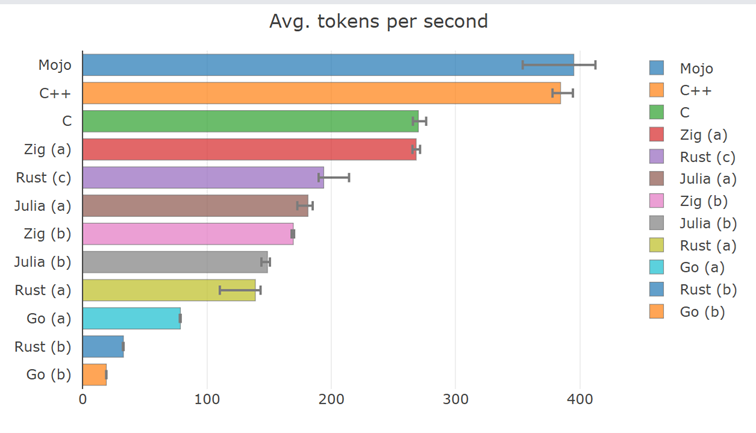

For example, let us take a look at Aydyn Tairov’s port of a Llama2 model, first from C to Python, and then to Mojo (see GitHub repo). Tairov benchmarked the model across 6 other languages under both single-threaded and multi-threaded cases. Mojo consistently performed well. In fact, the following benchmark shows Mojo outperforming C++, C, Zig, Rust, Julia and Go:

Figure 1.1 - Llama2 Inference on Mac M1 Max, multi-threaded [ stories42M.bin ] – Mojo outperforms 6 system languages in average tokens processed per second. Source: Llama2 Ports Extensive Benchmark Results on Mac M1 Max

This is no surprise, because Mojo is built on modern compiler technologies like MLIR. Performance is further enhanced by using explicit types, for example, declaring that a variable num is of type Int (integer).

To understand why this matters, we need to look at Mojo’s compilation model and memory management.

Compilation model

Mojo compiles to machine code specific to the target platform, optimizing for every type in the code. This is done through the fast, next-generation MLIR compiler toolchain, which brings several innovations in compiler internals.

Mojo is the first language built entirely on MLIR. You could think of it this way: Mojo is to MLIR as C is to Assembly.

By translating into MLIR, Mojo automatically benefits from MLIR/LLVM’s execution speed optimizations.

The resulting machine code runs on a high-performance concurrent runtime. “Concurrent” means that the runtime can execute parts of your code in parallel, achieving even higher performance. The compiler itself is written in C++.

Mojo can be compiled in two ways:

Just in Time (JIT) compilation: Used in dynamic Python-like environments such as the Mojo Read Evaluate Print Loop (REPL) or Jupyter notebooks.

Ahead of Time (AOT) compilation: Used when you need a standalone executable for a specific environment, for example in AI deployment.

Python, by contrast, is interpreted by the CPython runtime.
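To see what "interpreted" means in practice, the following Python sketch uses the standard `dis` module to list the bytecode instructions that CPython executes one by one for a small function. The helper `opcode_names` is a made-up name for this illustration, and the exact instruction names differ between Python versions, so no fixed listing is shown here.

```python
import dis


def add(a, b):
    return a + b


def opcode_names(func):
    """Return the names of the bytecode instructions CPython runs for func."""
    return [instruction.opname for instruction in dis.get_instructions(func)]


if __name__ == "__main__":
    # Prints the bytecode listing; the interpreter loop dispatches on each
    # of these instructions at run time instead of running native code.
    dis.dis(add)
    print(opcode_names(add))
```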

When a Mojo executable runs, it executes binary machine code native to the target system. In JIT mode, the boundary is less clear: portions of code are compiled while code is already executing.

Python and Mojo can talk to each other: Python code embedded in a Mojo application is executed via an embedded CPython interpreter at run time. You can think of this as CPython talking to Mojo.

Memory management

How memory usage is managed plays a major role in the speed and robustness of a language. Mojo possesses automatic memory management, which means that memory is cleaned up automatically in the most efficient and safe way without developer intervention. Unlike Java, C# or Python, Garbage Collection (GC) is not used in Mojo. As a result, Mojo doesn't suffer from GC pauses and can be used in real-time software. Memory is a precious resource in AI, so this is definitely a significant advantage.

Mojo is also designed for systems programming. It allows manual memory management using unsafe pointers, like in C++ and Rust. This enhances performance but decreases safety: the developer is responsible for managing memory manually when using unsafe pointers.

Scalable and adaptable to new hardware

Running a Python app in parallel is limited by the Global Interpreter Lock (GIL): the standard CPython interpreter can only execute Python code on one thread at a time, even in a multithreaded application. This means that CPU-bound Python code effectively uses only one of your machine's cores.
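The effect is easy to observe. The following Python sketch runs the same CPU-bound work first sequentially and then on a thread pool. The workload and function names are invented for this illustration. On a standard CPython build, the threaded run is typically no faster than the sequential one, because the threads take turns holding the GIL. Exact timings depend on the machine, and the optional free-threaded builds available since Python 3.13 behave differently, so no timings are quoted here.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def count_primes(limit: int) -> int:
    """CPU-bound pure-Python work: count primes below limit by trial division."""
    count = 0
    for n in range(2, limit):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count


def run_sequential(limits):
    return [count_primes(limit) for limit in limits]


def run_threaded(limits):
    # One thread per task; under the GIL they cannot run Python code in parallel.
    with ThreadPoolExecutor(max_workers=len(limits)) as pool:
        return list(pool.map(count_primes, limits))


if __name__ == "__main__":
    limits = [20_000] * 4
    for label, runner in (("sequential", run_sequential), ("threaded", run_threaded)):
        start = time.perf_counter()
        results = runner(limits)
        elapsed = time.perf_counter() - start
        print(f"{label:>10}: {elapsed:.2f}s, results={results}")
```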

Mojo has no such limitation. It provides built-in parallelization, enabling automatic multithreaded code execution across multiple cores. This allows full utilization of the available CPU.

Through Mojo's LLVM foundation, a whole range of processor instruction sets (like IA-32, x86-64, ARM, Qualcomm Hexagon, MIPS, and so on) can be targeted.

On top of that, MLIR adds a layer of adaptability. That's why Mojo can potentially run on all kinds of hardware, like CPUs (x86, ARM), GPUs, TPUs, DPUs, FPGAs, QPUs, custom ASICs, and so on.

Safety: a Python you can trust

Mojo’s type system also improves error checking. Code becomes safer and more reliable, because far fewer problems occur at runtime; many are caught at compile time instead. This increases confidence in your codebase.
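To illustrate the kind of problem a compile-time type check removes, here is a small Python sketch with invented function names. The type annotation is not enforced by the Python interpreter, so the mistake in the rarely used branch goes unnoticed until that branch actually runs. A statically checked language would typically reject the equivalent call before the program runs at all.

```python
def shout(word: str) -> str:
    return word.upper() + "!"


def run_pipeline(rare_case: bool) -> str:
    if rare_case:
        # Wrong argument type: Python accepts this at definition time and
        # only fails when this line is executed.
        return shout(42)
    return shout("mojo")


if __name__ == "__main__":
    print(run_pipeline(False))  # MOJO!
    try:
        run_pipeline(True)
    except AttributeError as error:
        print(f"only discovered at run time: {error}")
```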

Automatic memory management further improves safety, because it prevents all kinds of memory errors (like segfaults) and memory leaks, which are a common cause of bugs in C++ applications.

The compiler tracks the lifetime of every variable and destroys its data as soon as it is no longer in use. This is known as eager destruction or As Soon as Possible (ASAP) destruction.

Mojo uses concepts like ownership, borrow-checking, and lifetimes, similar to Rust, but with simpler syntax.

Metaprogramming

Python is renowned for its dynamic and metaprogramming capabilities, but since Python is interpreted, this happens at runtime.
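As a reminder of what runtime metaprogramming looks like, the following Python sketch builds a brand-new class while the program is running, using the built-in `type()` constructor. The factory name `make_record_class` and the `Point` example are invented for this illustration.

```python
def make_record_class(name, fields):
    """Build a new class at run time with one attribute per field name."""
    fields = tuple(fields)

    def __init__(self, *values):
        if len(values) != len(fields):
            raise TypeError(f"{name} expects {len(fields)} values")
        for field, value in zip(fields, values):
            setattr(self, field, value)

    def __repr__(self):
        body = ", ".join(f"{field}={getattr(self, field)!r}" for field in fields)
        return f"{name}({body})"

    # type(name, bases, namespace) creates the class object on the fly.
    return type(name, (), {"__init__": __init__, "__repr__": __repr__})


Point = make_record_class("Point", ["x", "y"])

if __name__ == "__main__":
    print(Point(1, 2))  # Point(x=1, y=2)
```

All of this work is done by the interpreter every time the program starts, which is the cost that compile-time metaprogramming avoids.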

Mojo uses compile-time metaprogramming which comes at zero runtime cost, meaning it does not incur a performance penalty. Moreover, to metaprogram, you just use the same Mojo syntax as for "normal programming".

Here is a simple example that calculates SUM at compile time:

    alias SUM = sum(10, 20, 2)  #1

    fn sum(lb: Int, ub: Int, step: Int) -> Int:
        var total = 0
        for i in range(lb, ub, step):  #2
            total += i
        return total

    fn main():
        print(SUM)  # => 70  #3
        print(sum(10, 20, 2))  # => 70  #4

Line 1 defines the alias SUM using the function call sum(10, 20, 2).

The loop in line 2 iterates over the values 10, 12, 14, 16, 18, adding them up to get 70.

The statement in line 3 compiles directly to print(70), because SUM is evaluated at compile time—it is an alias.

In line 4, the same sum is calculated at run time using a direct function call.
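For contrast, here is what the closest Python counterpart looks like. This is a sketch, and the function is renamed to `stepped_sum` to avoid shadowing Python's built-in sum. The module-level constant is computed when the module is loaded, so the loop still runs every time the program starts; there is no way in plain Python to have the value 70 baked into the program ahead of time.

```python
def stepped_sum(lb: int, ub: int, step: int) -> int:
    total = 0
    for i in range(lb, ub, step):
        total += i
    return total


# Evaluated when the module is imported, which is still run time.
SUM = stepped_sum(10, 20, 2)

if __name__ == "__main__":
    print(list(range(10, 20, 2)))  # [10, 12, 14, 16, 18]
    print(SUM)                     # 70
```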

Metaprogramming in Mojo also allows for compile-time parameters, written in square brackets []. These can be used in functions or structs. For example:

    fn greet_repeat_args(count: Int, msg: String):  #1

The function in line 1 uses both count and msg as runtime arguments.

    fn greet_repeat[count: Int](msg: String):  #2

The function in line 2 uses count as a compile-time parameter, and msg as a runtime argument.

Mojo’s tooling

A modern programming language needs a full-fledged IDE, with AI code assistance. Mojo has you covered on this front.

The Modular software is installed through the well-known package manager pixi. The officially supported way of developing Mojo projects is Visual Studio Code with the Mojo plugin (get it here), complete with an LSP server for code completion, docs, function signature help, and so on. Mojo also works well with AI-assisted tools like GitHub Copilot.

The plugin provides an LLDB debugger, which integrates nicely with the VS Code interface. Mojo has facilities for unit testing and benchmarking built in.

To get started with a new Mojo project, use the Getting Started tutorial. With pixi you create a Mojo project in a virtual environment, where the package dependencies and environment settings are automatically managed for you. This lets you maintain multiple isolated Mojo projects, each with its own toolchain and packages.

Mojo also uses the same conventions for its module system as Python. Additionally, modules can be pre-compiled into packages, which can then be reused across projects for a performance boost.

Where Mojo stands today

At the time of publication, the latest stable version of Mojo is 25.4, released on Jun 19, 2025. Mojo currently runs on Linux and macOS. On Windows, all features work through Windows Subsystem for Linux (WSL), while a full native port is a mid-term project.

Mojo is being worked on by a large, dedicated team at Modular and by an ever-growing group of external developers (over 260 developers have already contributed to the language). The community is active: the Mojo Discord server has over 22,000 users, and the project has received more than 24,300 stars on GitHub. The community hub, Discord, and forum are great places to connect with other Mojo users and the Modular team.

The Mojo repository is open sourced under the Apache License v2.0. Currently, this applies to the full standard library and all documentation.

Mojo has many features that go beyond Python, even though it currently implements only a subset of Python’s syntax. Notably missing are the global keyword and user-defined class types. However, support for even more Python features remains a long-term goal.

Mojo, of course, was not invented in a vacuum. Besides being a member of the Python family, Mojo benefits from insights and lessons learned from languages like Rust, Swift, Julia, Zig, Nim and Jai.

Mojo and the MAX engine currently run on Intel, AMD, and ARM CPUs, specifically:

x86-64 (Intel and AMD, running Ubuntu 20.04/24.04 LTS or other Linux variants)

AWS Graviton3 ARM CPUs

They also support a range of accelerators:

Mojo GPU kernels for NVIDIA (A100, H100, H200, and any PTX-compatible devices)

AMD MI300X/MI325X

All without needing CUDA.

A big chunk of the language has already reached stability. However, spurred by the new revolution in GPU programming, improvements are still being made all the time. A production-ready version is expected in Q1 of 2026.

Although AI is Mojo’s major goal right now, it is not built only for that. Its unique properties make it suitable for projects in areas such as:

Performance critical applications: High-Performance Computing (HPC), scientific/technical computing, data analysis and transformations, and high-quality web and other back-end servers.

Embedded systems: IoT devices, mobile, automotive, or robotics, and hardware-integrated software.

Low-level system programming: Device drivers, firmware, databases and operating system components.

Game Development: Performance-intensive engines, physics simulations, and real-time graphics. AAA games need blazing performance and low-level control. Mojo offers both.

Cryptography and Security-related applications: Secure communication systems, encryption algorithms, and cryptographic protocols.

Other kinds of applications: CLI tools, database extensions, editor plugins, graphical native desktop UIs, and so on.

A glimpse of what is possible can be found in the Mojo community project list at awesome-mojo.

Community-built libraries include numerical tools (e.g., numojo, decimojo) and networking stacks (e.g., lightbug), alongside efforts in bioinformatics and high-energy physics.

In upcoming articles, we’ll explore in more depth what makes Mojo unique:

Compile-time metaprogramming in Mojo

Low-level memory operations in Mojo

GPU programming with Mojo

© 2025 Ivo Balbaert. All rights reserved.
